Headless Browser Testing Clarified

Headless Browser Testing is mostly unnecessary.

This article is one of the “IT Terminology Clarified” series.

Headless Browser Testing is a way of running browser tests without the browser UI (the “head”, a term borrowed from Unix). Let’s look at a Selenium test execution in headless mode, alongside one in normal mode for comparison.

Demo: Headless vs Normal

First, a normal Selenium test execution with the browser UI.

Then the same test is run in headless mode.

As you can see, there is no Chrome browser shown during the second test execution and the test still passed. This is headless testing.

History of Headless Browser Testing

Between 2010 and 2015, headless browser testing with PhantomJS was highly hyped by test architects/engineers. I tried it and was puzzled about how it could possibly work. In my book “Selenium WebDriver Recipes in Ruby”, I recommended avoiding headless testing with PhantomJS.

In 2017, PhantomJS was deprecated. According to Vitaly Slobodin, its main maintainer: “(Headless) Chrome is faster and more stable than PhantomJS. And it doesn’t eat memory like crazy. I don’t see any future in developing PhantomJS.” It turned out that those ‘we did headless testing with PhantomJS’ claims were just lies (if the test automation framework is not reliable, ….)

Chrome v59 (released in June 2017) introduced its own headless mode. This is what I mean by real headless.

How to start Chrome in headless mode?

```ruby
require 'selenium-webdriver'

# Start Chrome without a visible browser window
options = Selenium::WebDriver::Chrome::Options.new
options.add_argument('--headless')

driver = Selenium::WebDriver.for :chrome, options: options
```

Why Headless?

Test execution is faster in headless mode.

How much faster in headless mode?

For the simple test above, normal mode took 20.2 seconds and headless mode took 18.7 seconds.

After taking out the fixed delay (`sleep 1`) in the test script, headless mode saved 7.9%.
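To make the percentage concrete, here is a small sketch of the calculation, applied to the two timings quoted above (with the `sleep 1` still in place). The helper name `percent_saving` is made up for this illustration. The 7.9% figure comes from the run without the fixed delay, whose raw timings aren’t listed here, so it isn’t reproduced.

```ruby
# Percentage of execution time saved by headless mode,
# relative to the normal (headed) run. Rounded to one decimal place.
def percent_saving(normal_seconds, headless_seconds)
  ((normal_seconds - headless_seconds) / normal_seconds * 100).round(1)
end

puts percent_saving(20.2, 18.7) # prints 7.4
```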

Is it stable for real-life test automation?

Yes, mostly.

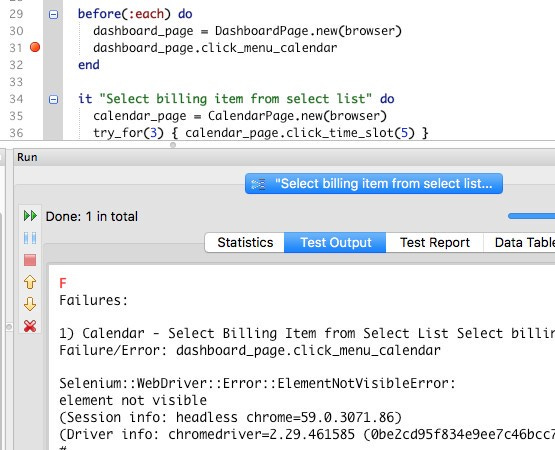

However, I did encounter a few issues with early versions of ChromeDriver and Chrome. For example, below is a screenshot of a test failure that happened only in headless mode.

These days, Selenium + ChromeDriver + Chrome (headless) is quite stable. Still, the example above shows that the same test can behave differently in headless mode.

Test failures that occur only in headless execution are a pain to debug

A couple of weeks ago, an engineer showed me two executions of the same test script: PASS in normal mode; FAIL in headless mode. She was a good test automation engineer, but after a couple of hours she still couldn’t figure out the reason and gave up. The reason: debugging this kind of test failure is very HARD.

Every time she ran the test in normal mode to debug it, it passed. She took my advice and ran the test on a BuildWise CT server, in two separate build projects. After many runs, the results were consistent: PASS in normal mode; FAIL in headless mode. BuildWise showed a screenshot of the app at the moment the test failed. That helped narrow down the scope, but the cause was still unclear in this case.

I couldn’t access that internal app; otherwise, I would have been interested in finding out the cause. Nevertheless, the lessons from this story:

Headless execution results can be different

When that happens, debugging is hard.

The simple solution: run tests in normal mode.

I rarely run my tests in headless mode

My hesitation about headless execution is simply personal.

The initial failures I hit came from trying headless Chrome out early, before it had matured.

Google has done a good job with updates (to the Chrome browser and ChromeDriver), which is one of many benefits of choosing Selenium WebDriver.

‘Headless testing’ reminds me of fake test automation engineers I have met.

It was quite painful to see people lying, especially those with the title ‘Engineer’. Somehow, ‘headless testing’ is associated with ‘fake test automation’ in my mind, even though that is no longer the case since headless Chrome emerged.

Last year, I presented test automation to a group of managers and a principal software engineer. I did a live demonstration against the app, with test scripts written in a free and open-source framework (Selenium WebDriver).

The only technical question from the principal software engineer was: “Does it support headless?” The answer, of course, was yes.

However, I had a bad feeling about this. After the meeting, I told a friend (who worked at the same company but in a different team): “I think this principal software engineer would sabotage the test automation.”

My friend: “How could you tell from just one question?”

I replied: “Just a feeling, I hope I am wrong.”

Unfortunately, my prediction turned out to be true. I also learnt that this principal software engineer was the one who had designed a so-called ‘automation framework’ (based on Selenium Java with Concordion, wrong choices!), which failed badly. It was so bad and embarrassing that I even heard about it from a test manager at another company.

Let me emphasize it again: technically speaking, headless testing with Chrome is fine. The fact that I added “Headless” support to my TestWise tool reflects this view. If some tests don’t run well in headless mode, just extract them and run them in normal mode (while keeping the rest headless).
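Splitting a suite this way can be as simple as keeping a list of exceptions. Below is a minimal sketch, assuming test files are identified by filename; `HEADED_ONLY`, `partition_suite`, `chrome_args_for`, and the spec file names are all hypothetical, not part of TestWise or Selenium.

```ruby
# Hypothetical list of test files known to misbehave in headless mode.
HEADED_ONLY = %w[checkout_spec.rb file_upload_spec.rb].freeze

# Split a suite into a headless group and a normal (headed) group.
def partition_suite(test_files, headed_only = HEADED_ONLY)
  headed, headless = test_files.partition { |f| headed_only.include?(File.basename(f)) }
  { headless: headless, headed: headed }
end

# Chrome arguments for one test file: headless unless it is an exception.
def chrome_args_for(test_file, headed_only = HEADED_ONLY)
  headed_only.include?(File.basename(test_file)) ? [] : ['--headless']
end

groups = partition_suite(%w[spec/login_spec.rb spec/checkout_spec.rb spec/search_spec.rb])
p groups[:headed] # prints ["spec/checkout_spec.rb"]
```

In a real script, the returned arguments could be passed to `Selenium::WebDriver::Chrome::Options.new(args: chrome_args_for(file))`, so each test picks its own mode while the suite stays mostly headless.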

The reasons I rarely run tests in headless mode:

1. I want to see tests executing most of the time

When developing/refining/debugging a test script, I run it individually in normal mode. When a test fails, I want to see what went wrong by checking the web page in the browser (started by ChromeDriver). It would be quite frustrating to see only a bunch of text telling you that a test just failed; the error backtrace usually does not give the full picture. In other words, Chrome with UI is my default test execution mode.

When running a group of tests, I still like to casually watch the execution. You would be surprised at how many issues (such as error traces missed by the assertions in automated test scripts) I have spotted just by watching automated test executions.

2. I run all tests in a Continuous Testing server with parallel execution to greatly reduce the total execution time

The overall performance gain from headless execution is minor, about 8%. For an individual test, it is hardly noticeable. For a large suite, the help is minor as well, e.g. saving about 10 minutes out of two hours.
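The two-hour example is simply the ~8% per-test saving applied to the whole suite:

$$120\ \text{min} \times 0.08 = 9.6\ \text{min} \approx 10\ \text{min}$$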

Tip: there are generic ways to write faster test scripts; check out this article: Optimize Selenium WebDriver Automated Test Scripts: Speed

I prefer running Selenium tests in parallel on a Continuous Testing server like BuildWise. Here is a screenshot of a recent build report that showed an 82.2% reduction in execution time with 7 build agents running tests in parallel.
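For a sense of the upper bound: with a perfectly even split and no overhead, 7 agents can cut wall-clock time by at most 1 − 1/7 ≈ 85.7%. How close a build gets depends on how evenly test files are spread across agents. The sketch below uses a common greedy heuristic (longest test first, always to the least-loaded agent); it is an illustration under assumed per-file durations, not BuildWise’s actual scheduling algorithm, and `distribute`/`parallel_time` are hypothetical names.

```ruby
# Assign test files to build agents: longest estimated test first, each one
# to the agent with the smallest total so far (a greedy heuristic).
def distribute(durations, agent_count)
  agents = Array.new(agent_count) { { files: [], total: 0.0 } }
  durations.sort_by { |_file, secs| -secs }.each do |file, secs|
    agent = agents.min_by { |a| a[:total] }
    agent[:files] << file
    agent[:total] += secs
  end
  agents
end

# Wall-clock time of a parallel run is set by the busiest agent.
def parallel_time(agents)
  agents.map { |a| a[:total] }.max
end

agents = distribute({ 'login_spec.rb' => 60, 'claim_spec.rb' => 50,
                      'search_spec.rb' => 40, 'profile_spec.rb' => 30 }, 2)
p parallel_time(agents) # prints 90.0 (versus 180 seconds run serially)
```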

Besides a huge time saving (which can be even greater with more build agents), parallel test execution in CT has many other benefits, such as Auto-Retry, which eliminates a large percentage of false alarms.
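The idea behind auto-retry is simple: a failed test is re-run before being reported, so a one-off glitch doesn’t fail the whole build. Here is a minimal sketch, assuming the block stands in for “run one test file” and returns true on a pass; `run_with_retry` is a hypothetical name, and this is not how BuildWise implements the feature.

```ruby
# Run a test up to max_attempts times; report a pass if any attempt passes.
# The block stands in for running one test file and returns true on a pass.
# Returns the final status and the number of attempts made.
def run_with_retry(max_attempts: 2)
  attempts = 0
  loop do
    attempts += 1
    return [:pass, attempts] if yield
    return [:fail, attempts] if attempts >= max_attempts
  end
end

# Usage: a flaky test that fails once, then passes on the retry.
outcomes = [false, true]
p run_with_retry { outcomes.shift } # prints [:pass, 2]
```

Recording the attempt count alongside the status keeps tests that only pass on retry visible, rather than silently counting them as clean passes.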

When do I use headless testing?

I used the word ‘rarely’, which means I occasionally run automated tests in headless mode. I do that when I generate application/test data for team members or myself.

Test Automation is more than just verification. One of its many benefits is generating test data, which I have covered briefly in this article: Benefits of Continuous Testing (Part 3: to Business Analysts). I will write a dedicated article on this later.

In one of my recent consulting roles, some team members (especially business analysts) asked me to help generate test data after seeing my automated tests run. For example, submitting a new insurance claim application would take a business analyst or tester 3–5 minutes to do manually; automation scripts could complete the process in under 1 minute.

The team members would usually message me (via Slack or MS Teams) for help. As I didn’t want a new Chrome window popping up and interrupting my work, I would usually run the automation scripts in headless mode. The output, e.g. the application number, was shown in the TestWise console, and I would simply message the newly generated test data IDs back.

FAQ

I acknowledge that headless mode makes test scripts hard to debug, but my company requires us to run headless in Docker. Any suggestions?

For a test automation engineer, test execution reliability is above everything (otherwise the tests are of no use at all). If you are confident you can achieve that in headless mode, no problem.

If in doubt, use whatever setup makes test execution highly reliable. Remember, a good run on the Continuous Testing server means a 100% pass of all tests (and all steps).
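One way to live with such a requirement is to make the mode switchable, so the same scripts run headless in the container but can still be debugged in normal mode on your own machine. A minimal sketch, assuming an environment variable named `HEADLESS` (a hypothetical name chosen here) is set to `true` only inside Docker; the two extra flags are ones commonly added for Chrome in containers, not something every image needs.

```ruby
# Decide Chrome arguments from the environment, so the same scripts run
# headed on a workstation and headless in a container.
# HEADLESS is a hypothetical variable name chosen for this sketch.
def chrome_args(env = ENV)
  return [] unless env['HEADLESS'] == 'true'

  # Flags commonly added when Chrome runs inside Docker (e.g. as root, or
  # with a small /dev/shm); drop any your image doesn't need.
  ['--headless', '--no-sandbox', '--disable-dev-shm-usage']
end

p chrome_args({})                       # prints []
p chrome_args({ 'HEADLESS' => 'true' }) # prints ["--headless", "--no-sandbox", "--disable-dev-shm-usage"]
```

When a test fails only in the container, running it locally without `HEADLESS` set brings the browser UI back for debugging.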