Practical, Sensible Tips for Hiring Good Software Engineers, Tip #1: Quick Screening Questions

Saving time for both parties.

In contrast to Software Engineer Hiring Anti-Patterns, this article series shares my thoughts on good hiring practices.

#1: Quick Screening Questions

#2: Reading Books Regularly (coming soon)

#3: Clear Writing (coming soon)

#4: Using Keyboard Shortcuts Proficiently (coming soon)

#5: Master a Text Manipulation Language or Scripting (coming soon)

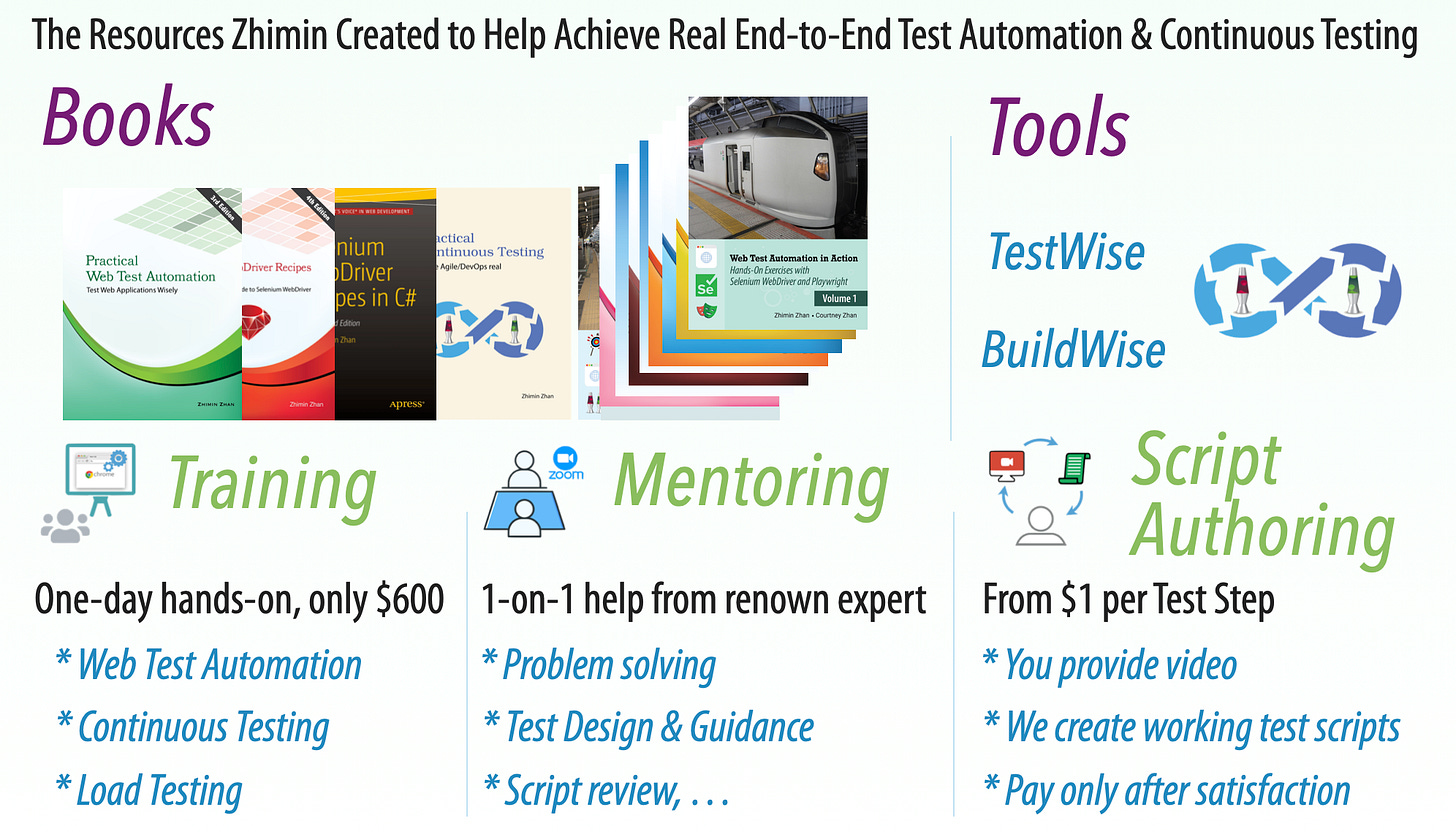

#6: E2E Test Automation skills (coming soon)

#7: Side work (coming soon)

At big tech companies, a single job posting often attracts hundreds of applications. To efficiently shortlist a small number of qualified candidates for further interviews, a standardized screening process is typically used.

The key word for screening questions is “Quick”, like the questions Rick (in the TV show “The Walking Dead”) asked newcomers.

An experienced interviewer or examiner knows that well-crafted yet simple questions can reveal a lot about a candidate. For example, I designed four screening questions for hiring test automation engineers, and they proved highly effective. For more, check out the article, Four Screening Questions to Filter Out Fake Test Automation Engineers in Interviews (🥇 1st most popular article of the 127th issue of Software Testing Weekly).

Here are my views on what makes good screening questions:

The number of questions should be limited to five or fewer.

This saves time, i.e. money. While most candidates can accept rejection, they become frustrated if it comes after they’ve invested too much time in the process. ThoughtWorks, to my knowledge, was a poor example of this, at least before 2010.

Clear, simple, and easy to understand.

The candidates do not need special background knowledge.

Prefer direct questions that candidates cannot evade.

Some cunning people like to use the strategy of "Unable to Convince, Then Confuse." A good screening question can prevent that; see the examples above.

Technical knowledge matters less than personal qualities, except when tackling a specific challenge, in which case hiring a coach or temporary contractor is the better option.

Here are the personal attributes that I take particular care to avoid.

Liars

Needless to say, you don’t want them in your team. However, in E2E test automation and Continuous Testing, a large percentage of people lie.

Check out the insightful story shared by renowned Agile expert Michael Feathers.

Hype-Chasers

People of this kind usually don’t get the work done.

Lack of common sense

Technology preferences are OK. However, beware of people who lack common sense, as shown by statements such as:

- “E2E testing scripting language has to be the same as the code”. (Wrong, black box testing is totally independent of the code)

- “We only need to do limited E2E testing, as unit testing is enough” (Wrong! An extra comma in JavaScript or CSS can prevent pages from rendering, and unit testing cannot detect that. See suggestions in World Quality Report 2018: ‘The first time ever that “end-user satisfaction” is the top objective of quality assurance and software testing strategy.’)

- “When a project is falling behind schedule toward the end, hiring more people will help” (Wrong, see the classic book: The Mythical Man-Month).

- “Manual testing—carrying out steps and documenting them in Excel spreadsheets—is the best practice for regression testing.” (Wrong, “the only practical way to manage regression testing is to automate it” - Steve McConnell)

- “We don’t want to use Ruby in E2E test automation because it is slow” (Very wrong, the language speed matters little in E2E testing)

By the way, I heard the above from real people, and you should avoid them.

Some suggested screening questions:

How frequently does your team deploy to production?

If your team isn't delivering deployable releases every sprint (as defined by Agile), what is the most critical capability that’s currently lacking?

What process do you follow to address defects reported by testers or customers?

In your view, what is the best approach to prevent defects from being discovered by end-users in production?

Can you break down the proportions of your efforts as a developer across coding, debugging, unit testing, end-to-end testing, and refactoring?

What steps do you typically take when refactoring code?

Do you believe your team is truly practising Agile methodologies? If not, what are the reasons? If you do consider it Agile, how do you avoid introducing regression defects while handling change requests and new user stories?

Few candidates can provide satisfactory answers, so it's essential to evaluate personal attributes like a willingness to learn, the ability to admit mistakes, and other relevant qualities.

Related reading: