Test Automation Camel, a metaphor that explains why most test automation attempts failed

A metaphor that gives the full picture of why most software projects fail at test automation.

According to the IDT automated software testing survey in 2007, “although 72% stated that automation is useful and management agrees, they either had not implemented it at all or had had only limited success.” (see this article for the definition of success) The reasons given for the failure of Automated Software Testing were:

Lack of time: 37%

Lack of budget: 17%

Tool incompatibility: 11%

Lack of expertise: 20%

Other (mix of the above, etc.): 15%

As more software companies have adopted agile methodologies (and even DevOps), I think it is safe to say that over 90% of software teams would now agree that test automation is useful or mandatory. However, most software projects still “either had not implemented it (E2E Test Automation) at all or had had only limited success”.

Table of Contents:

· Why the 'top 4 reasons for not doing Test Automation' are wrong?

· Test Automation Camel

∘ 1. Out of Reach: Expensive

∘ 2. Learning Curve: Steep

∘ 3. First Hump: Hard to Maintain

∘ 4. Second Hump: Long Feedback

Why the ‘top 4 reasons for not doing Test Automation’ are wrong?

Let’s examine the top 4 reasons listed by the survey respondents who wanted test automation but failed at it (or never even started). These reasons sound “about right”; however, they are wrong.

Lack of time

WRONG! Automation saves time. Automated test execution is faster, typically taking only 10%-30% of the time needed to run the same test manually. For example, on a recent project, the automated test I created shortened the execution of a typical manual test case from 1 hour to 6 minutes.

Given that regression testing needs to be performed frequently (at least once per sprint, many more if practising DevOps), surely test automation saves time.

Lack of budget

WRONG! In software development, saving time generally means saving money.

Regarding initial investment, a leading test automation framework such as Selenium WebDriver is 100% free. Tools for Selenium, such as TestWise and Visual Studio Code, are either free or very low cost.

Tool incompatibility

WRONG! Selenium (for web apps) and Appium (for Desktop and Mobile apps) are based on the W3C’s WebDriver standard. Test scripts in Selenium/Appium are plain text and drive the app using a standard protocol (for example, all browser vendors support WebDriver), so there are no compatibility issues.

Lack of expertise

As a statement of fact, I agree. However, have you ever seen any projects seeking external help to train/coach their testers and programmers? Your answer is “No”, right? So, this is just an excuse.

If managers truly agree with the value of Test Automation and acknowledge that the team lacks the skill/experience, then logically they should actively seek an experienced Test Automation coach to up-skill the team (the process of engaging agile coaches is already in place). But I rarely see this kind of role in job ads.

So, practically speaking, this reason is WRONG too.

When I showed the four reasons to my audience (at conferences or training sessions), they tended to agree with them. However, after I listed my objections, most of them changed their mind, and I could sense that they were surprised and then puzzled.

So, what are the real reasons why many software projects don’t do test automation at all, or fail badly at it?

Test Automation Camel

In 2007, I came up with a metaphor (in my first book) to explain why most test automation attempts failed: Test Automation Camel.

Imagine that, to succeed in test automation, a man (on the left) needs to climb over a camel.

He will face the following hurdles:

1. Out of Reach: Expensive

Over a decade ago, test automation tools were very expensive and could only be seen at large software companies. At the ANZTB 2010 conference (in Melbourne), a Tricentis sales representative said this in his ‘sponsored speech’: “Only cost $25,000 a license”. I could not find the price of Tricentis TOSCA on its website, which itself means something, right? Anyway, I found the price of another commercial testing tool; according to this website: “IBM’s RFT price is US$13,100”. (I reviewed RFT in 2013; it was quite bad. The free Watir and Selenium were much better.)

So, “Lack of budget to purchase test automation tools” was a legitimate reason before 2005, when Watir was released. Some might argue Watir was not industry-leading (despite it being featured in the Agile Testing book and used at Facebook). But after 2011, the high cost is no longer a valid excuse at all, but rather a wrong choice, as Selenium WebDriver was available and free.

Solution: use free Selenium WebDriver, which is better than others in every way.

Traditional (and expensive) test automation tools, which often promote record-n-playback or an Object Identification GUI Utility, are dying. Of course, their salesmen are still telling all kinds of stories. Let’s look at a fact: the former industry-leading HP QTP was sold to Micro Focus and renamed UFT, and Micro Focus has since released “UFT Developer” to support Selenium WebDriver. (Micro Focus was a ‘selenium’ level sponsor listed on the Selenium website for a few years. It was removed last year; however, you can still find Micro Focus in the sponsor list for the Selenium conference, like this one.)

Back to our topic, test automation has been accessible, for free, to everyone since 2011.

Related reading:

2. Learning Curve: Steep

Solution: Attend a proper Selenium training by a real test automation coach.

Selenium WebDriver is the easiest web test automation framework to learn. Yes, it is true: it is far easier than any of the others.

“How long will it take to learn Selenium?”, some might wonder. My answer: 1 day. In my 1-day Web Test Automation with Selenium WebDriver training, I cover more on test design and maintenance. Once, one company expressed doubts about our training, “only one day for Selenium WebDriver?” I replied: “Yes, in fact, learning (not mastering) Selenium WebDriver is only a small part of our training, please see the info pack”. Why?

Selenium WebDriver follows an intuitive syntax: “Find a web element, and perform an operation”.

An example:

driver.find_element(:id, 'login-btn').click

WebDriver was defined by some of the best automation experts at W3C, after many rounds of revisions.
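The same find-then-operate pattern extends naturally to a short flow. The following is a minimal sketch, not taken from the training material: the method name `sign_in` and the element IDs `username` and `password` are hypothetical, and `driver` is assumed to be a Selenium WebDriver instance created elsewhere.

```ruby
# Each step is "find a web element, then perform an operation on it".
# `driver` is assumed to be a Selenium::WebDriver instance created elsewhere,
# e.g. Selenium::WebDriver.for(:chrome) after require "selenium-webdriver".
# The element IDs "username" and "password" are hypothetical.
def sign_in(driver, username, password)
  driver.find_element(:id, "username").send_keys(username)
  driver.find_element(:id, "password").send_keys(password)
  driver.find_element(:id, "login-btn").click
end
```

Only three WebDriver calls appear here (`find_element`, `send_keys` and `click`), which is part of why the syntax is quick to pick up.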

Many people learn Selenium WebDriver in the wrong ways, such as: using record-n-playback and object maps; building a custom ‘framework’; using the wrong language (such as Java, C# or JavaScript); using the wrong syntax framework (such as Gherkin); using a test design that is harder to maintain; or using a complex (often expensive) tool.

My daughter started writing raw Selenium tests when she was 12, and so could your child, because learning Selenium is just that easy if taught properly. Seeing is believing; see the article below.

Related reading:

3. First Hump: Hard to Maintain

I think >90% of test automation attempts failed here.

Commonly, test engineers were quite excited after being able to create automated tests that perform testing. But they don’t know two things:

Test maintenance is much harder than test creation; as a result, it requires far more effort.

Generally speaking, test automation engineers lack the knowledge or experience for this. Therefore, old tests start to fail as the application changes frequently. Invalid tests are worse than no tests.

Solution: Functional Test Refactoring

To overcome this challenge, the team (with management’s support) needs to switch its focus from creating test scripts to keeping all test scripts valid all the time. That’s a mindset change. It is obvious (after being pointed out); however, it is not easy to make the change.

Here I will focus on the techniques that will help you achieve the goal:

Maintainable Automated Test Design

Design automated test scripts that are easy to maintain. The key concept is being intuitive.

Functional Test Refactoring

You will need to make a lot of changes to existing test scripts to keep them up to date. Efficiency is the key. Functional Test Refactoring is a quick, reliable, and efficient way to improve the test design of your automated test scripts.

In fact, that is the core content of my book “Practical Web Test Automation” and of my 1-day Selenium training (as I said earlier, Selenium WebDriver is extremely easy to learn).
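As an illustration of what a maintainable design can look like, one common refactoring is to extract repeated element lookups into a page class. This is a minimal sketch under assumptions: the class name, method names and element IDs are hypothetical, and it is not necessarily the exact design taught in the book or training.

```ruby
# A page class keeps element locators in one place, so a changed ID in the
# application is fixed once here rather than in every test script.
# Class name, method names and element IDs are hypothetical.
class LoginPage
  def initialize(driver)
    @driver = driver # a Selenium::WebDriver instance, created by the test
  end

  def enter_username(username)
    @driver.find_element(:id, "username").send_keys(username)
  end

  def enter_password(password)
    @driver.find_element(:id, "password").send_keys(password)
  end

  def click_login
    @driver.find_element(:id, "login-btn").click
  end
end

# In a test script the steps then read close to how a user would describe them:
#   login_page = LoginPage.new(driver)
#   login_page.enter_username("bob")
#   login_page.enter_password("secret")
#   login_page.click_login
```

When the application changes (say, the login button gets a new ID), only `click_login` needs updating, and every test that uses the page class stays valid.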

Related reading:

Optimize Selenium WebDriver Automated Test Scripts: Maintainability

Optimize Selenium WebDriver Automated Test Scripts: Readability

4. Second Hump: Long Feedback

Once the test suite reaches a certain size (30+ tests), the team will start to realize the wait is becoming intolerable. Over 95% of the remaining software teams would fail here.

For example, if the average execution time for one test is 1 minute, the wait will be half an hour for a 30-test suite. That is a long wait for a team that has started to realize the benefits of Shift-Left Testing. You might have heard of the term “10-minute build” in Agile.

Solution: Continuous Testing

The only practical solution is to run all tests in a Continuous Testing Server, which distributes tests to multiple build agents to run them in parallel.
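The arithmetic behind parallel execution can be sketched in a few lines. This is a rough estimate only: the method name `feedback_minutes` is hypothetical, every test is assumed to take the same average time, and agent start-up and distribution overhead are ignored.

```ruby
# Rough feedback-time estimate for a UI test suite split evenly across build agents.
# Assumes every test takes the same average time and ignores agent start-up and
# distribution overhead, so real numbers will be somewhat higher.
def feedback_minutes(test_count, avg_minutes_per_test, agents)
  raise ArgumentError, "agents must be at least 1" if agents < 1

  # The overall wait is set by the agent that receives the most tests.
  tests_on_busiest_agent = (test_count.to_f / agents).ceil
  tests_on_busiest_agent * avg_minutes_per_test
end

sequential = feedback_minutes(30, 1, 1) # the 30-test example above, run one by one
parallel   = feedback_minutes(30, 1, 5) # the same suite on 5 hypothetical build agents
puts "Sequential: #{sequential} min, 5 agents: #{parallel} min"
```

With these assumptions, the 30-minute sequential wait drops to 6 minutes on five agents, which is back under the “10-minute build” mark.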

However, many companies got it wrong. Typically, so-called CI/CD (or DevOps) engineers try to run automated UI tests in the existing CI server, such as Jenkins and Bamboo. Of course, there can be only one result: failure.

Since 2014, I have been noting down the best pass rate in software companies’ CI servers, typically Jenkins. Of course, I ignored small suites with just a few simple login tests. The top figure is 48%, i.e. in the best build over a month or so, only 48% of the automated UI tests passed. The threshold for real agile projects, in my opinion, should be > 95%.

Below is the build history for WhenWise (one of my apps). The green bar means 100% pass of 500+ E2E Selenium tests. Immediately after each green build, we push the build to production.

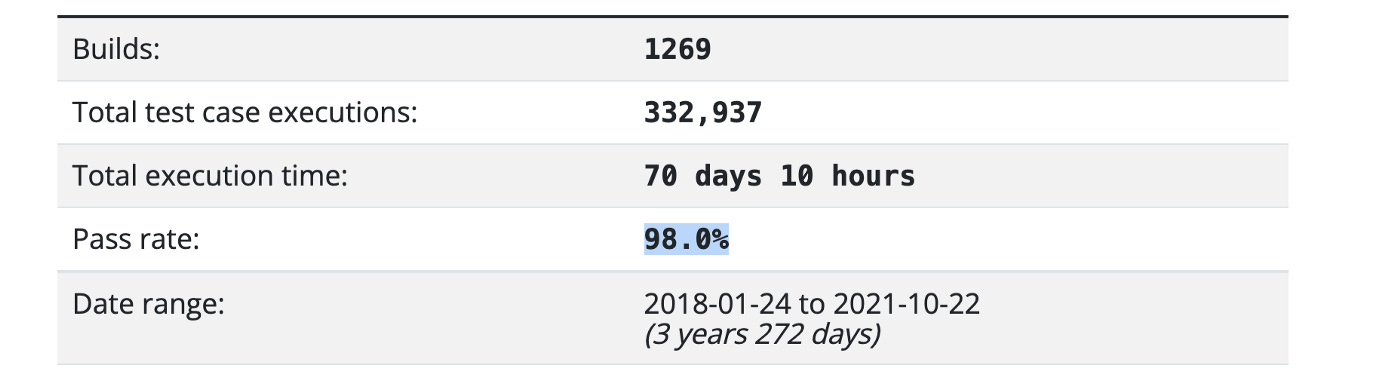

Over 3.5 years, the average test pass rate is 98%.

The reasons for the failures in CI servers are obvious:

Those CI/DevOps engineers never experienced real E2E regression testing in their whole careers.

Execution of Automated E2E tests is vastly different from programmers’ unit tests.

For motivated engineers who want to succeed, my advice is to dump Jenkins or the like. It is the wrong tool for the job. Use a dedicated Continuous Testing server. I recommend this great presentation: “Continuous Integration at Facebook”.

The Facebook test lab (the above) enables quick feedback.

Getting a green build for a reasonably sized UI test suite (e.g. >75 tests) is not easy. For example, to get a green build (passing all tests) for my app WhenWise, every one of the 28,000+ test steps (equivalent to user operations, e.g. clicking a button or an assertion) must pass.

WHENWISE TEST STATS

+------------+---------+---------+---------+--------+
| TEST       | LINES   | SUITES  | CASES   | LOC    |
|            | 26049   | 335     | 558     | 20416  |
+------------+---------+---------+---------+--------+
| PAGE       | LINES   | CLASSES | METHODS | LOC    |
|            | 9863    | 181     | 1621    | 7449   |
+------------+---------+---------+---------+--------+
| HELPER     | LINES   | COUNT   | METHODS | LOC    |
|            | 819     | 5       | 61      | 634    |
+------------+---------+---------+---------+--------+
| TOTAL      | 36731   |         |         | 28499  |
+------------+---------+---------+---------+--------+

I have managed to achieve that almost every day (when there are changes to my apps: TestWise, BuildWise, ClinicWise, SiteWise, WhenWise and TestWisely). How? Please check out my book: “Practical Continuous Testing, make Agile/DevOps real”.
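To get a feel for why an all-green run over 28,000+ steps is demanding, here is a back-of-the-envelope calculation. It is purely illustrative: the 99.99% per-step pass rate is an assumed figure, not a measurement from WhenWise, and the steps are assumed to pass or fail independently, which real test steps do not strictly do.

```ruby
# Chance that a whole run is green if every step passes independently with the
# same probability. Both the independence and the 99.99% figure are assumptions
# for illustration, not measurements from WhenWise.
def green_build_probability(step_pass_rate, step_count)
  step_pass_rate**step_count
end

chance = green_build_probability(0.9999, 28_000)
puts format("Chance of an all-green run: %.1f%%", chance * 100)
```

Under these assumptions, even steps that each pass 99.99% of the time would produce a fully green run only about 6% of the time, so a suite of this size leaves very little room for flaky steps.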

Related reading:

Summary

Let’s review the four stages (or challenges):

Before starting, some only see “expensive cost”. Some give up.

After starting, some quickly find it hard to learn. Some give up.

The team members start developing tests and are quite motivated initially (seeing execution in a real browser). Soon, the team finds it harder and harder to keep the test scripts up to date as the application changes (which is normal, and more frequent in agile projects). Most teams give up here.

After overcoming the above 3 challenges, the team can develop and maintain test scripts. However, a growing number of tests means a longer execution time, which has a lot of negative impacts on the team. For example, what shall programmers do during the wait? Most software companies have no experience in setting up a parallel testing lab to run a large number of UI tests in a CT (please note, not CI) server. So, most of the remaining teams fail here.

Based on my estimation, < 0.1% of software teams can overcome all four challenges (check against the AgileWay Continuous Testing Grading).

Astute readers might have figured out why I used the Camel metaphor. When a software project starts an attempt at test automation, the team doesn’t see the whole picture. Test engineers and managers without this knowledge see one harder challenge after another, seemingly without end. This makes test automation success (or real Agile/DevOps) even rarer. Engineers stop at different stages, i.e. they have memories of only failures.

Executives have low expectations. They think: “We have a large manual testing team anyway. A test automation attempt every 3 or 4 years is just to make us sound more ‘agile’.”

Engineers think “E2E test automation” is impossible, or go looking for ‘snake oil’.

As you can see, with that mindset, their test automation attempts failed on the first day.

I rescued many test automation attempts (and therefore, the software projects). People were amazed at how quickly and easily I turned things around. To their surprise, the formula is incredibly simple (and costs little):

using raw Selenium WebDriver + RSpec (Ruby)

one-day general test automation training for testers and programmers, and other interested members such as business analysts, architects and managers.

start writing real test scripts for work from the 2nd day, under my mentoring

run all automated tests in a Continuous Testing server such as BuildWise, as regression testing, multiple times a day.

keep all tests valid by the end of the day. If not, make it the first task the next morning. Fixing broken builds is the top priority of the team.

I created the ‘Test Automation Camel’ metaphor in 2007, and it remains valid today.