Testing Pyramid Clarified

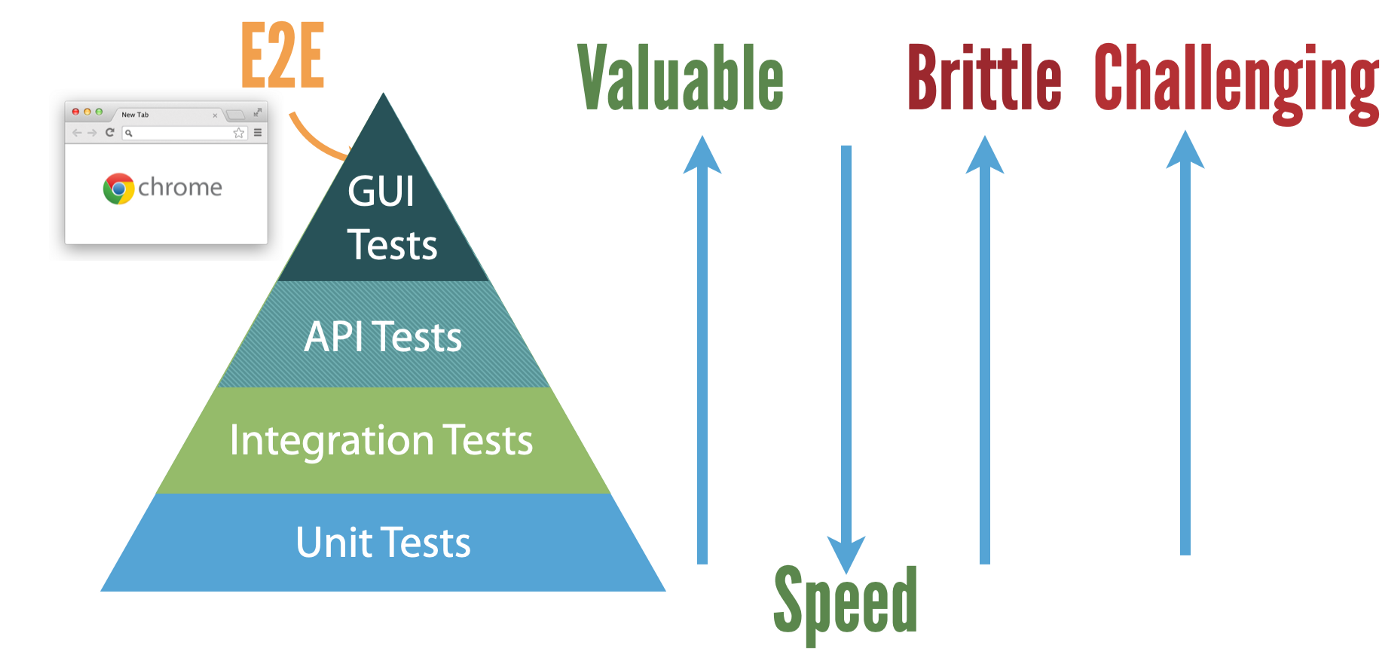

Comparison of four types of automated testing in the Testing Pyramid

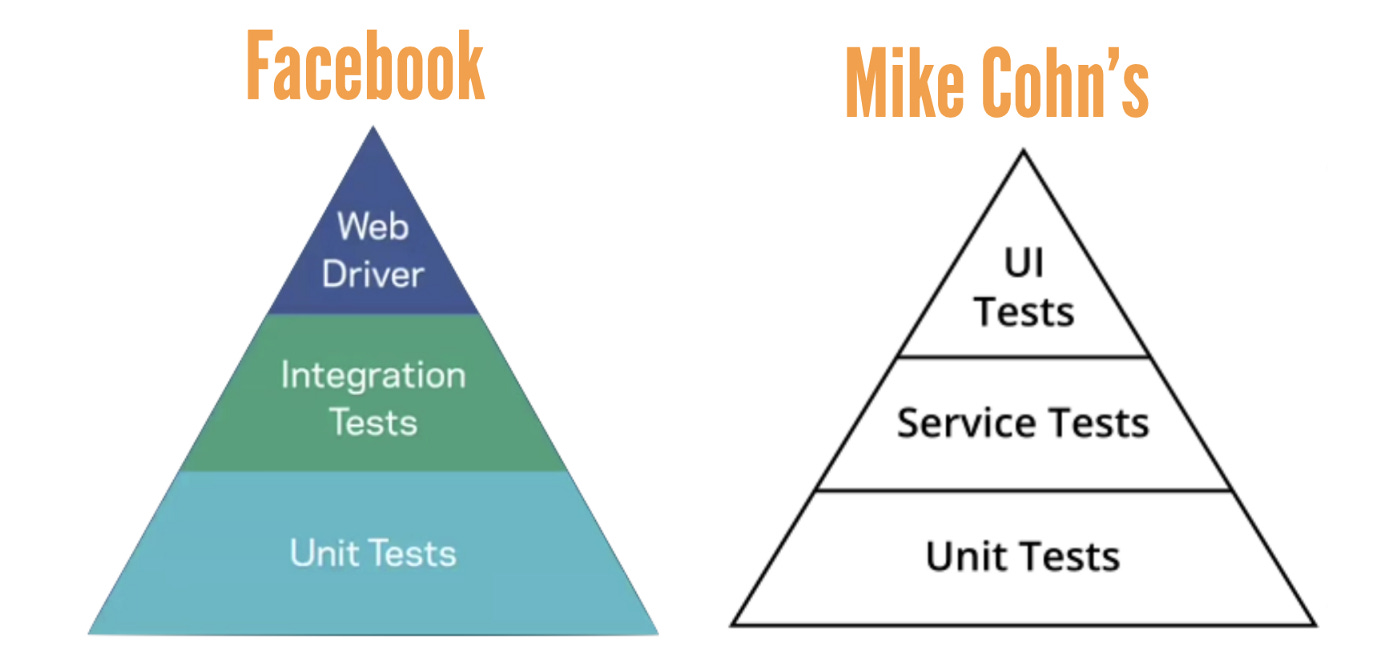

Many software professionals have heard of the “Testing Pyramid”, and there are many versions.

Testing Pyramid (automation)

Below is the testing pyramid at Facebook (from this great presentation: “Continuous Integration at Facebook”).

A quick explanation of the three tiers:

WebDriver (UI: web or mobile)

Automated End-to-End tests. Facebook uses WebDriver (Selenium WebDriver for web, and Appium WebDriver for mobile) for its End-to-End testing. For web testing, you see the actions performed in a browser.

(black-box testing)

“For all of our end-to-end tests at Facebook we use WebDriver, WebDriver is an open-source JSON wired protocol, I encourage you all check it out if you haven’t already. ” — Katie Coons, a software engineer at Product Stability, in “Continuous Integration at Facebook”

Integration Tests

Verify two parts of the system coming together.

(white/black-box testing)

Unit Tests

White-box testing, performed by programmers at the source code level, specifically on individual methods.
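To make the difference between the bottom two tiers concrete, here is a minimal Python sketch using only the standard library (`unittest`, `unittest.mock`, `sqlite3`). `PriceService` and `SqlitePriceRepository` are hypothetical classes invented for illustration. The unit test replaces the repository with a `Mock`, so it exercises one method with no real dependency; the integration test wires the same service to a real (in-memory) SQLite database, so it verifies two parts of the system working together.

```python
import sqlite3
import unittest
from unittest.mock import Mock


class PriceService:
    """Hypothetical class under test: applies a percentage discount to a stored price."""

    def __init__(self, repository):
        self.repository = repository  # injected, so a test can swap in a Mock

    def discounted_price(self, product_id, percent):
        price = self.repository.find_price(product_id)
        return round(price * (100 - percent) / 100, 2)


class SqlitePriceRepository:
    """Hypothetical real dependency backed by a SQLite connection."""

    def __init__(self, conn):
        self.conn = conn

    def find_price(self, product_id):
        row = self.conn.execute(
            "SELECT price FROM products WHERE id = ?", (product_id,)
        ).fetchone()
        return row[0]


class PriceServiceUnitTest(unittest.TestCase):
    # Unit test: the repository is a Mock, so only discounted_price() runs for real.
    def test_discount_applied(self):
        repo = Mock()
        repo.find_price.return_value = 80.0
        service = PriceService(repo)
        self.assertEqual(service.discounted_price(1, 25), 60.0)
        repo.find_price.assert_called_once_with(1)


class PriceServiceIntegrationTest(unittest.TestCase):
    # Integration test: the service and a real in-memory database work together.
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL)")
        self.conn.execute("INSERT INTO products VALUES (1, 80.0)")

    def tearDown(self):
        self.conn.close()

    def test_discount_with_real_repository(self):
        service = PriceService(SqlitePriceRepository(self.conn))
        self.assertEqual(service.discounted_price(1, 25), 60.0)
```

Saved as, say, `test_price.py` (a hypothetical file name), both tests can be run with `python -m unittest test_price`. Note that the unit test needs no database at all, which is one reason unit tests are fast and stable.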

Many people use the term “unit testing” wrongly; check out my other article, “Unit Testing Clarified”.

This Facebook presentation was created in 2015, and since then, the demand for API testing has grown quickly (driven by microservices). Where does it fit in the Testing Pyramid? Here is my understanding:

I will share my understanding of these four types of testing, from the bottom of the pyramid up, in terms of:

Value

Count

Speed

Brittleness

Challenge

Value: low => high

All valid automated tests are valuable. The most valuable tier is clearly the top one (as the Pyramid implies anyway): End-to-End UI testing. The reason: with comprehensive automated End-to-End UI regression testing, a software team can “Release Early, Release Often”. (CIOs really wish for that! By the way, that is real Agile, or DevOps.)

“Facebook is released twice a day, and keeping up this pace is at the heart of our culture. With this release pace, automated testing with Selenium is crucial to making sure everything works before being released.” — DAMIEN SERENI, Engineering Director at Facebook, at Selenium 2013 conference.

No matter how well a software team does the other three types of testing, it dare not push updates to production daily without the top tier: comprehensive End-to-End testing via the UI.

Besides QA, automated E2E UI tests provide many other benefits, such as:

greatly boost the customers’ confidence in the product

safeguard later maintenance work

create test data for the team members, especially business analysts

greatly shorten the bug-fix cycle

etc.

Test Count: Large => Small

This is clearly indicated in the Pyramid.

Below is a rough guide (based purely on my observations, not at all scientific) to test case counts for a typical medium-complexity web app.

unit test : 2000

integration test: 500

API test : 200

GUI test : 100

Here I just provide a rough ratio for a good agile project. In reality, many software projects have zero automated UI tests that run reliably and frequently. Quite often, a so-called ‘agile’ team claims to run automated end-to-end tests nightly in Jenkins/Bamboo, but in reality the tests fail at a rate of over 50% and no one cares about the results. This is fake test automation, and it is totally useless.

Execution Speed: Fast => Slow

For simplicity, I use the following as a guide (just a guide) to compare the average execution time per test across the four types of automated testing.

unit test : 0.01 s

integration test: 0.1 s

API test : 1 s

GUI test : 100 s

Some programmers might say, “My unit tests take much longer than that.” The most likely reason is that those are not real unit tests; please check out my article “Unit Testing Clarified”.

Programmers can run unit and integration test suites in an IDE (such as IntelliJ IDEA). Most teams also set up a Continuous Integration (CI) server, such as Jenkins or Bamboo, to run the whole suite of unit and integration tests.

API and GUI tests, however, should be run in a Continuous Testing (CT) server, such as BuildWise or Facebook’s Sandcastle, not in a CI server. The reason is that, compared to unit tests, these tests take much longer (especially UI tests) and are more fragile (I will cover this shortly).
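The difference in total run time becomes obvious if you multiply the rough test counts by the per-test times from the two guides above. The Python sketch below does exactly that; the figures are the article’s illustrative numbers, not measurements, and it assumes each tier’s tests run one after another.

```python
# Rough per-tier figures from the guides above: (test count, seconds per test).
# Illustrative numbers only, not measurements.
TIERS = {
    "unit": (2000, 0.01),
    "integration": (500, 0.1),
    "api": (200, 1.0),
    "gui": (100, 100.0),
}


def suite_seconds(count, seconds_per_test):
    """Total sequential run time for one tier."""
    return count * seconds_per_test


def report(tiers):
    """Print and return the total run time of each tier."""
    totals = {name: suite_seconds(c, s) for name, (c, s) in tiers.items()}
    for name, secs in totals.items():
        print(f"{name:<12} {secs:>9.1f} s  ({secs / 60:.1f} min)")
    return totals


if __name__ == "__main__":
    report(TIERS)
```

Under these assumptions, the unit and integration tiers together take about 70 seconds, a reasonable CI feedback loop, while the GUI tier alone takes 10,000 seconds (close to three hours). That gap is why the slower tiers belong in a CT server rather than in the CI build.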

Execution stability: reliable => brittle

Automated tests are meant to be run frequently, not thrown away after running once (which is what happens at many software teams, although it was not the initial intention). As we know, change is constant in software development; accept that, or you are in the wrong industry. Changes happen even more frequently in Agile projects.

The execution reliability of automated tests determines the maintenance effort. Generally speaking, a test with more dependencies is more brittle.

unit test : no dependencies, using Mocks

integration test: one dependency

API test : multiple dependencies and server

GUI test : multiple dependencies, server and browser

Automated GUI tests are known for being brittle, i.e., easy to break. For example, JavaScript and AJAX are widely used in modern web apps, and many factors can cause test failures:

server load (slower handling of an AJAX request)

the client machine (which runs the browser) starts a virus scan in the background

infrastructure issues

test data is no longer valid

browser crash

…
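The first factor, a slow AJAX response, is a classic cause of flaky GUI tests: the test checks the page before the response has arrived. A common mitigation is an explicit wait that polls for a condition instead of sleeping for a fixed time; Selenium’s Python bindings offer `WebDriverWait(driver, timeout).until(...)` for this. The sketch below shows the same polling idea without a browser, using a hypothetical helper `wait_until` and a made-up simulated page. It does nothing for the other factors, such as a browser crash or invalid test data.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.2, clock=time.monotonic, sleep=time.sleep):
    """Hypothetical helper: poll `condition` until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds have passed.
    `clock` and `sleep` are injectable so the helper can be tested without real waiting.
    """
    deadline = clock() + timeout
    while True:
        result = condition()
        if result:
            return result
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        sleep(interval)


# Simulated page (made up for illustration): the "AJAX" result appears on the 3rd check.
checks = {"n": 0}


def ajax_result_visible():
    checks["n"] += 1
    return "Saved" if checks["n"] >= 3 else None


print(wait_until(ajax_result_visible, timeout=5, interval=0.01))
```

A fixed `sleep` is either too short on a loaded server (the test fails) or too long everywhere else (the suite slows down); polling with a timeout avoids both.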

To give you some perspective: to get a green build on WhenWise’s automated regression suite (consisting of 545 raw Selenium WebDriver tests), every single one of its 28,000+ test steps (e.g., user operations such as clicking a link or asserting a piece of text) must pass!
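Why is “every step must pass” such a high bar? A back-of-the-envelope model: if each step passes with probability p and failures are independent, a run of N steps is green with probability p^N. The Python sketch below evaluates this for N = 28,000 with a few hypothetical per-step pass rates (both the rates and the independence assumption are illustrative, not measured).

```python
def green_build_probability(step_pass_rate, steps):
    """Chance that every step passes, assuming step failures are independent."""
    return step_pass_rate ** steps


if __name__ == "__main__":
    # Hypothetical per-step pass rates; 28000 steps as in the WhenWise suite.
    for rate in (0.9999, 0.99999, 0.999999):
        p = green_build_probability(rate, 28000)
        print(f"per-step pass rate {rate}: green build {p:.1%}")
```

Under this model, a per-step reliability of 99.99% gives a green build only about 6% of the time; it takes roughly 99.9999% per step to get above 97%. Real failures are not independent, so treat this only as intuition for why GUI tests must be extremely reliable.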

WhenWise regression suite statistics:

| Category | Lines | Suites / Classes / Count | Cases / Methods | LOC |
|---|---|---|---|---|
| Test | 26091 | 335 suites | 558 cases | 20451 |
| Page | 9863 | 181 classes | 1621 methods | 7449 |
| Helper | 819 | 5 | 61 methods | 634 |
| Total | 36773 | | | 28534 |

Implementation Challenge: easy => hard

Having understood the “brittleness” of the four types of automated testing, we can see that automated UI testing is the hardest to implement. Many “senior software engineers” look down on testers (including test automation engineers). This is wrong, but unfortunately common. It is because they are just mediocre programmers, as I was before 2005 (when I had already been a senior Java contractor for years, but had not yet learned test automation).

“In my experience, great developers do not always make great testers, but great testers (who also have strong design skills) can make great developers. It’s a mindset and a passion. … They are gold”.

- Google VP Patrick Copeland, in an interview (2010)

“95% of the time, 95% of test engineers will write bad GUI automation just because it’s a very difficult thing to do correctly”.

- Microsoft test guru Alan Page, in an interview (2015)

Some might argue that the experts above are referring to people, not necessarily to automated UI testing itself. OK, here are two more quotes.

“Automated testing through the GUI is intuitive, seductive, and almost always wrong!” — Robert Martin, co-author of the Agile Manifesto, “Ruining your Test Automation Strategy”

“Testing is harder than developing. If you want to have good testing you need to put your best people in testing.”

- Gerald Weinberg, in a podcast (2018)

As you can see, there are two extreme views of automated UI testing: very easy, or mission impossible. In my opinion, it is quite achievable when following the guidance of a real test automation coach.

Beware of ‘Testing Pyramid’ talks by fake agile consultants

I have met several fake test automation consultants / agile coaches who liked to talk about the ‘Testing Pyramid’ for months.

A few years ago, the agile transformation team at a large financial company engaged an external test automation consultant from an agile consulting company. The team had been working on a roadmap to implement automated UI testing for nine months! When the ‘agile transformation’ manager said this in front of me, I was shocked.

Later, someone suggested that the ‘test automation consultant’ talk to me, and we had a chat at a coffee shop. I told him that a real test automation engineer must implement a few tests and run them in a CT server on the first day. After that, he avoided me (taking another lift if he saw me coming). But I did see his many slides and Confluence pages on the ‘Testing Pyramid’. Beware of fake test automation consultants like him.

Question: Given Automated UI Testing is so valuable (top of the Pyramid), why is it rarely done in software projects?

The short answer is: it’s hard. Of course, there is more to it; don’t IT geeks like difficult challenges? There are many human factors involved, and it is easy to make wrong decisions. Furthermore, once a bad decision is made, it is very hard to acknowledge and correct it, because quietly reverting to the usual manual testing avoids embarrassment.

I have covered these topics in several other articles (links provided at the bottom). Here, try to answer these two questions:

1. If you are a CIO/CTO, would you pay a good test automation engineer 5 times the salary of a senior software engineer?

I would, happily, because they are so rare and they do much more important and challenging work. If you don’t believe me, read Wired’s article “The Software Revolution Behind LinkedIn’s Gushing Profits”.

“Much of LinkedIn’s success can be traced to changes made by Kevin Scott, the senior vice president of engineering and longtime Google veteran lured to LinkedIn in Feb. 2011” — Wired, 2013

See: even LinkedIn, a Silicon Valley tech giant, had to ‘lure’ a top engineer, who helped implement its Continuous Testing process.

2. Suppose a company is somehow lucky enough to have access to a real test automation coach. If the coach asked you to drop certain technologies, would you change?

For example, a software company has been trying to run SpecFlow (C#) tests in Jenkins. There are three mistakes here:

C# is not a scripting language

SpecFlow Gherkin syntax creates a lot more maintenance effort, unnecessarily

Jenkins is a CI server, thus not suitable for running automated E2E tests

Another very common situation: a team of JavaScript programmers tries to implement automated E2E testing using Cypress.

Now suppose a real test automation coach suggests a well-proven approach: Selenium WebDriver (as listed in Facebook’s testing pyramid) with RSpec, run in a CT server (note: Facebook built its own CT server, Sandcastle, rather than using CI servers such as Jenkins or Bamboo).

How many companies will actually listen? The wise ones will, but they are rare.