The importance and value of Continuous Testing (automated end-to-end UI regression testing) from an observer's perspective

From the Sidelines: Understanding the Value of Automated UI Regression Testing

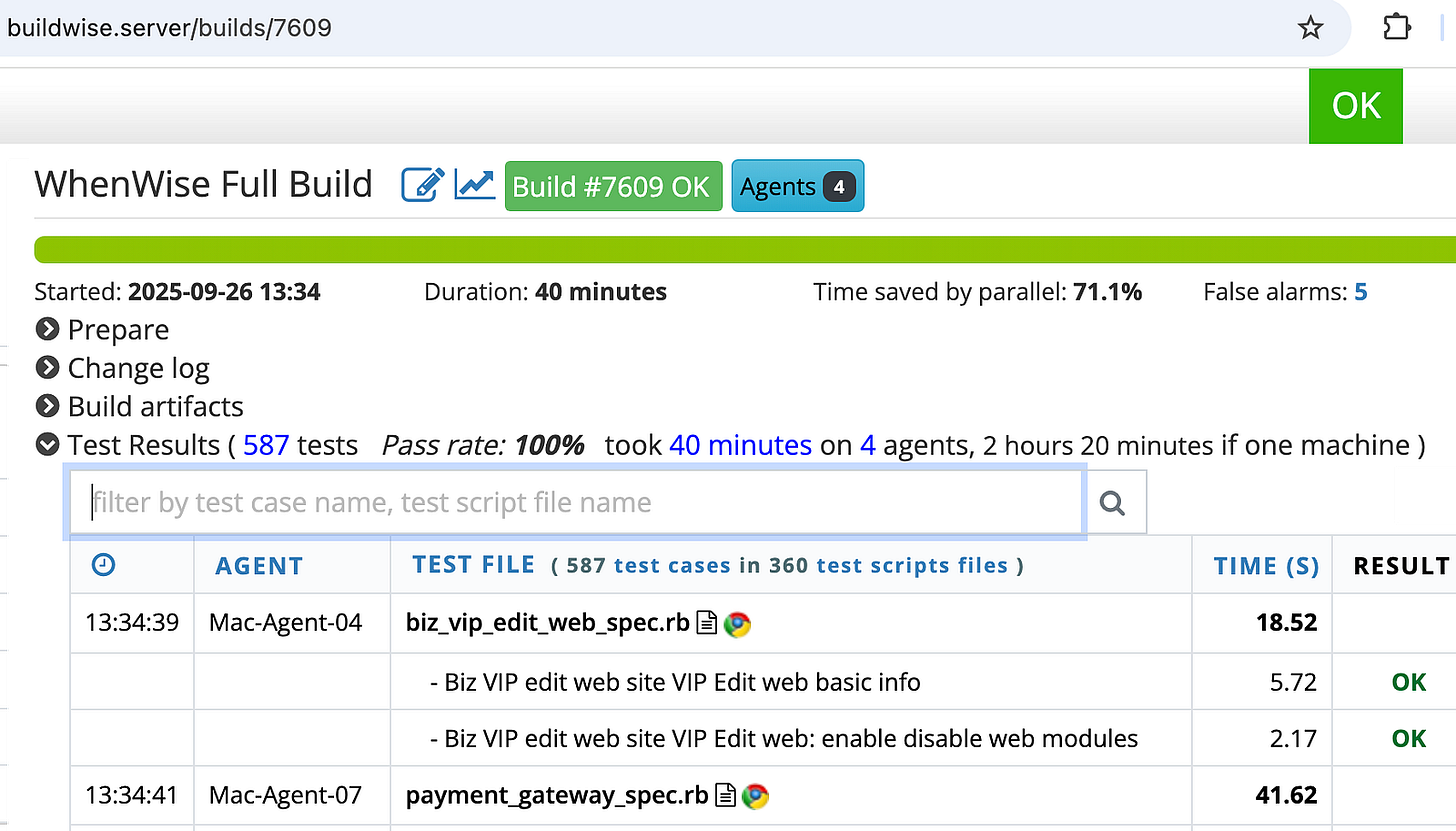

Below is the test report of an automated regression testing run (on a BuildWise CT server) for one of my father’s side projects yesterday. He occasionally shares these reports in the Agile Way newsletter.

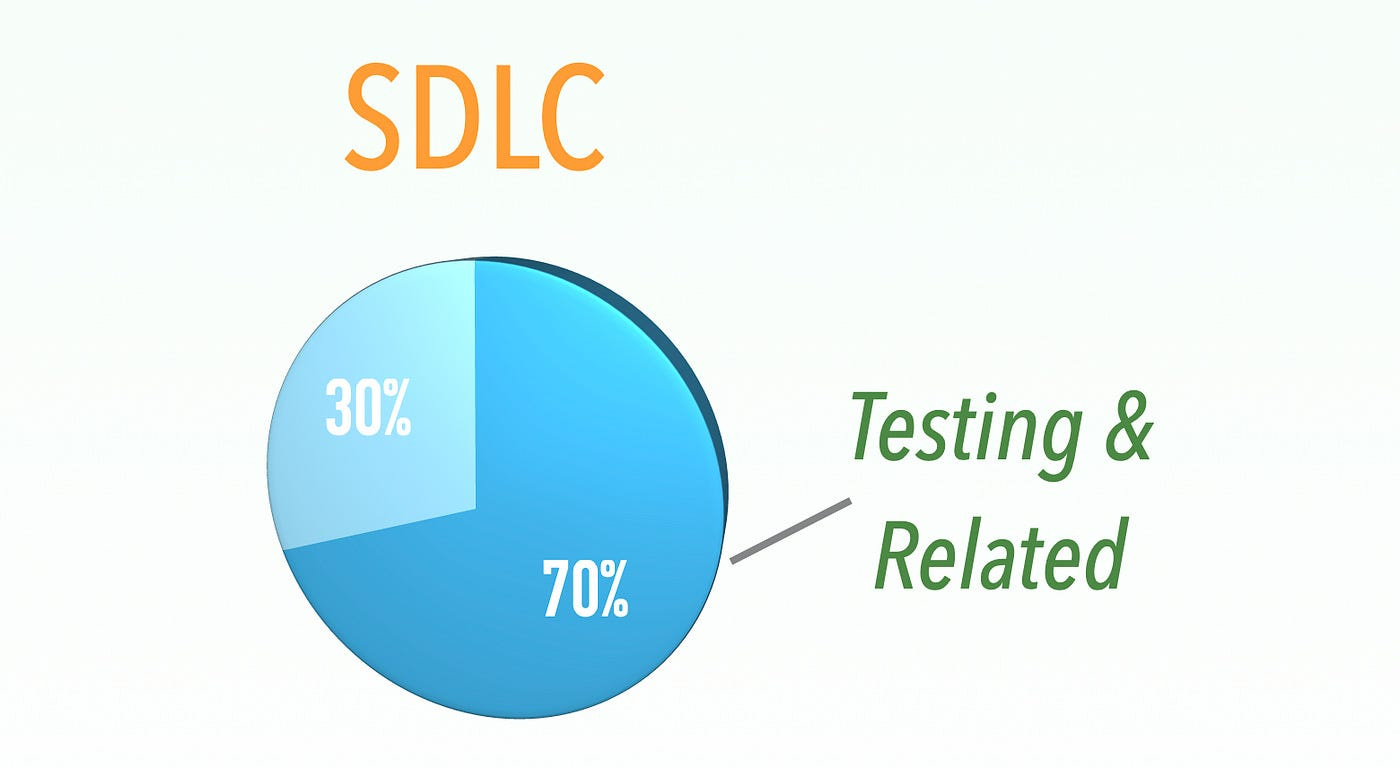

When asked about the importance of automated end-to-end (UI) regression testing, my father usually responds: ‘It’s the most important and valuable practice in software development, unless it’s a disposable, one-time-use app.’ He even quantified that in this article: “~70% of SDLC Effort is on Software Testing and Related Activities”.

Some software professionals might react with shock and disbelief. In this article, I will support that claim from an observer's perspective.

My father has been working on his side-hustle web apps since 2012 (though his first project, TestWise, a testing IDE, dates back to 2006, when I was too young to remember). Because he worked on these apps at night and on weekends, while holding a full-time job during the day, I unintentionally observed how he developed software for over a decade. He spent more of his time in TestWise and BuildWise than in his coding IDE, TextMate.

Before I became a software engineer, I thought my father’s rapid development (he often delivered customer-requested features by the next day) was just somewhat better than normal. In fact, his productivity far exceeded typical industry standards. The secret is, of course, that he really does Agile, with a devotion to automated E2E regression testing.

Here, with his permission, I use two of his automated regression testing runs to illustrate. As shown earlier, this regression testing run of 587 Selenium tests passed (40 minutes of execution time with 4 BuildWise Agents), and it was followed by a production deployment, as is usual for him.
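To put the run time in perspective, here is a back-of-envelope sketch in Python. It rests on assumptions the report does not confirm: that 587 is the number of tests in the run, that the 40 minutes is wall-clock time, and that the work was spread evenly across the 4 agents. Treat the output as a rough estimate, not a measurement.

```python
# Back-of-envelope estimate. The three figures come from the article;
# how they are interpreted here is an assumption.
TESTS = 587           # assumed: number of tests in the run
WALL_CLOCK_MIN = 40   # assumed: reported execution time is wall-clock
AGENTS = 4            # BuildWise Agents running tests in parallel


def estimate(tests: int, wall_clock_min: float, agents: int) -> dict:
    """Estimate serial run time and average per-test time.

    Assumes the load was balanced evenly across all agents.
    """
    agent_minutes = wall_clock_min * agents
    return {
        "serial_minutes": agent_minutes,
        "avg_seconds_per_test": agent_minutes * 60 / tests,
    }


result = estimate(TESTS, WALL_CLOCK_MIN, AGENTS)
print(f"Serial estimate: {result['serial_minutes']:.0f} min")
print(f"Average per test: {result['avg_seconds_per_test']:.1f} s")
```

Under these assumptions, the same suite on a single machine would take roughly 160 minutes, which suggests why parallel execution matters for getting feedback several times a day.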

Some facts:

| Category | Lines | Count | Cases / Methods | LOC |
| --- | ---: | ---: | ---: | ---: |
| TEST | 30585 | 378 suites | 627 cases | 24070 |
| PAGE | 11346 | 210 classes | 1852 methods | 8538 |
| HELPER | 931 | 5 | 68 methods | 723 |
| TOTAL | 42862 | | | 33331 |

There are 33,331 lines of test scripts; a green run means that every one of the 30,000+ test steps passed.

Some might ask, “Does this really prove the effort was useful?”

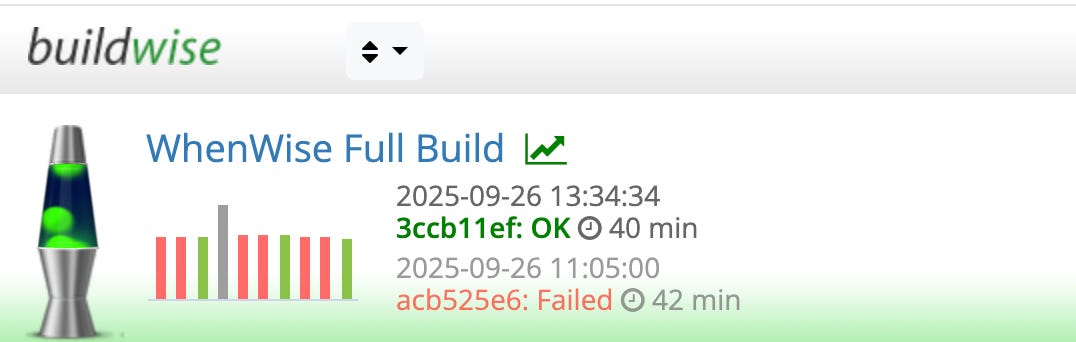

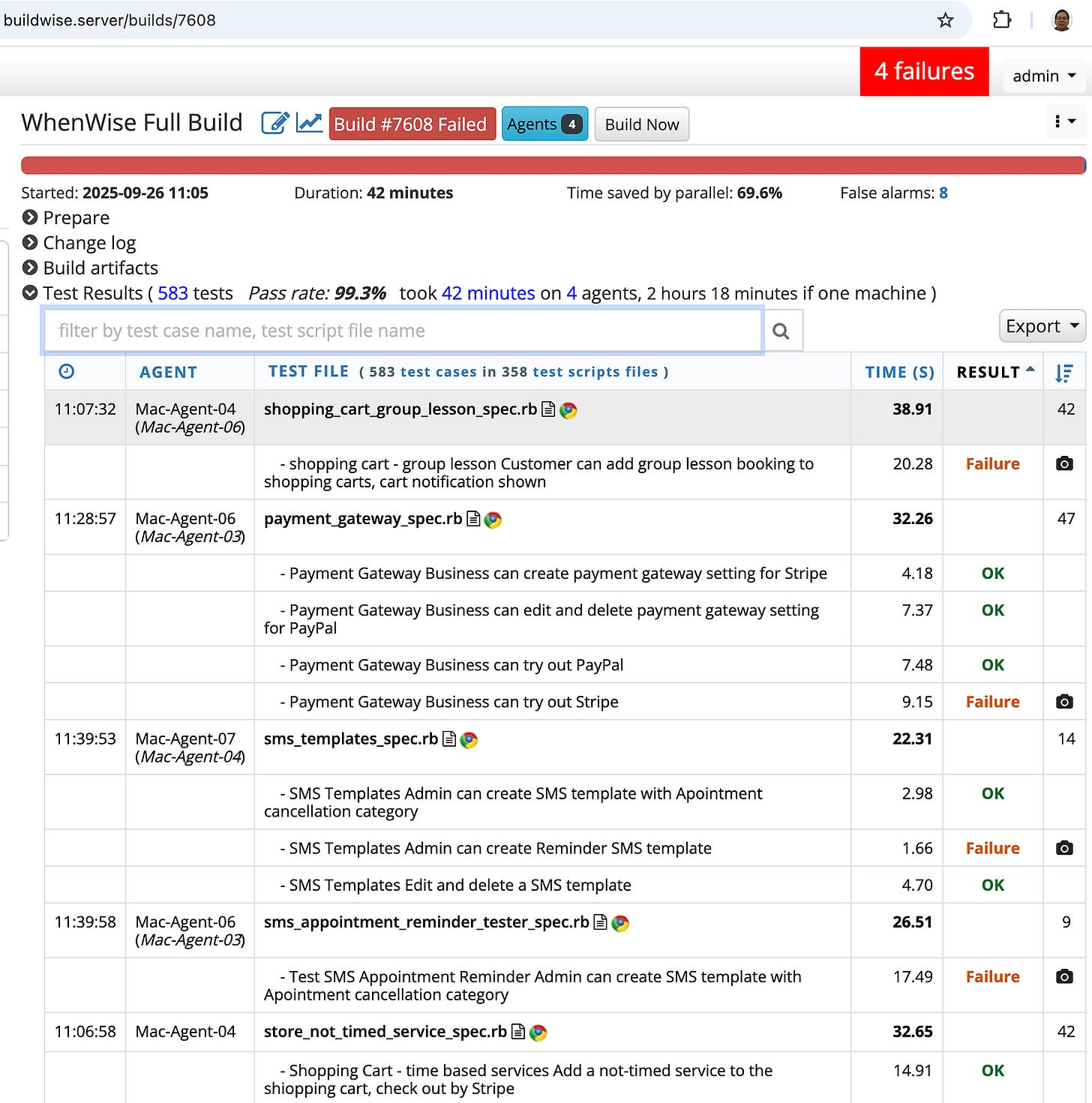

There was a previous run that failed on the same day, about 2.5 hours earlier.

Four automated tests failed, and his recent check-ins had caused the failures.

Yes, even for an international-award-winning programmer like my father, code check-ins sometimes introduce regression errors that would affect existing customers. Keep in mind that this is a software side-hustle project: there were no junior developers, misunderstandings, or merge conflicts.

Now imagine how many more regression errors occur in typical ‘agile software teams’. Without comprehensive end-to-end regression testing, these errors often go unnoticed, eventually becoming costly defects, compromises, and technical debt. That’s why development productivity (and morale) in most teams is generally much lower than my father’s.

Astute readers might have noticed the 2.5-hour gap. Yes, during that time he fixed all four defects, had lunch, and ran another comprehensive full regression testing run. For more, check out this book: “Practical Continuous Testing: make Agile/DevOps real”.