Web Test Automation Clarified. Part D: Combined

Putting it all together - misconceptions and pitfalls in web test automation.

In earlier articles of this series, I explained the meaning of each term in Web Test Automation:

Web → open, standard, and easy to understand.

Test → objectivity and independence.

Automation → speed, visibility, objectivity, and cost-effectiveness.

Few disagree, right?

However, when the three are combined into Web Test Automation, much of that meaning is often distorted. Odd practices counter to the core principles of Web Test Automation have emerged — and are often widely accepted. For example:

1. Senior Software Engineers decide which automation test framework and scripting language to use.

This is against the “independence” in “Test”.

Software testing exists to verify the work of developers. End-to-end testing, in particular, validates the application from the end-user’s perspective. In other words, this is not the developers’ playground. So why should testers be forced to follow developers’ mandates on frameworks and tools? That seems absurd, doesn’t it?

There are even ‘test automation’ frameworks that publicly advertise themselves as “developer-loved”.

Shouldn’t the slogan be changed to ‘Tester-loved’ instead?

2. Teams choose a proprietary automation framework, such as QTP or Cypress.

This is against the “standard” in “Web”.

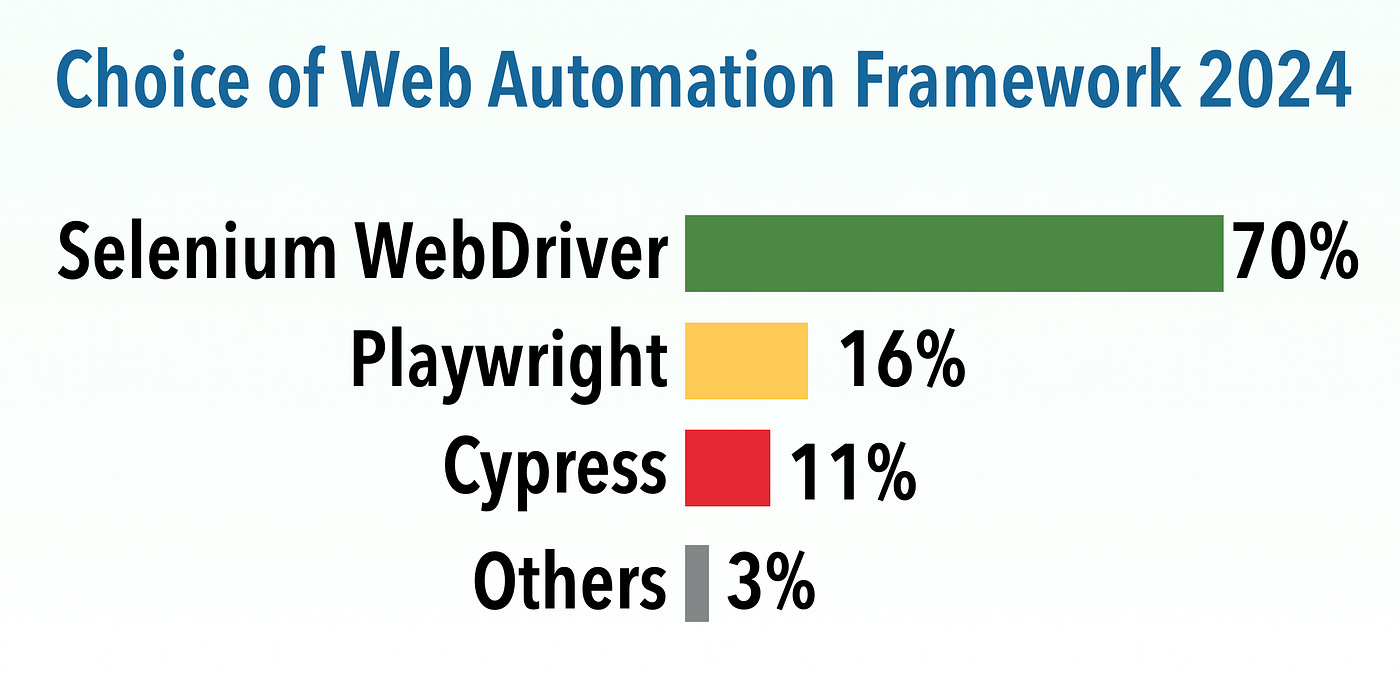

Web technologies are defined by the W3C, and WebDriver itself is a W3C standard for automating web elements in a browser. Moreover, every browser vendor supports WebDriver by providing its own driver. So shouldn’t Selenium WebDriver be the default — if not the only — choice for Web Test Automation?
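To make the "standard" point concrete, here is a minimal sketch of the commands a WebDriver client sends to a browser driver. The endpoint paths and payload shapes follow the W3C WebDriver specification; the page URL, the `#username` selector, the function names, and the placeholder session and element ids are my own assumptions for illustration. Nothing is sent over the network here, and a real client such as Selenium would read the ids from the driver's responses.

```python
# Sketch only: builds W3C WebDriver commands as (method, path, body) tuples.
# SESSION_ID / ELEMENT_ID are placeholders; a real driver returns the actual ids.

def new_session(browser_name):
    # "New Session" command: the only step that names the browser.
    body = {"capabilities": {"alwaysMatch": {"browserName": browser_name}}}
    return ("POST", "/session", body)

def navigate_to(session_id, url):
    # "Navigate To" command.
    return ("POST", f"/session/{session_id}/url", {"url": url})

def find_element(session_id, css):
    # "Find Element" command using the "css selector" location strategy.
    return ("POST", f"/session/{session_id}/element",
            {"using": "css selector", "value": css})

def click_element(session_id, element_id):
    # "Element Click" command.
    return ("POST", f"/session/{session_id}/element/{element_id}/click", {})

def login_scenario(browser_name, session_id="SESSION_ID", element_id="ELEMENT_ID"):
    # Hypothetical scenario: open a login page and click the username field.
    return [
        new_session(browser_name),
        navigate_to(session_id, "https://example.com/login"),
        find_element(session_id, "#username"),
        click_element(session_id, element_id),
    ]

if __name__ == "__main__":
    for method, path, body in login_scenario("firefox"):
        print(method, path, body)
```

Calling `login_scenario("chrome")` instead changes only the first command. Every later step is identical, which is what a shared standard buys you.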

I’ve seen my father use Selenium WebDriver for over a decade with remarkable consistency — super reliable and highly productive. Personally, I find it both easy to learn and a pleasure to use.

I’m not saying that every web development team must use Selenium WebDriver, but with such a high-quality, free, and standards-based option, shouldn’t the burden of proof fall on those who choose something else? And yet, in reality, that rarely happens.

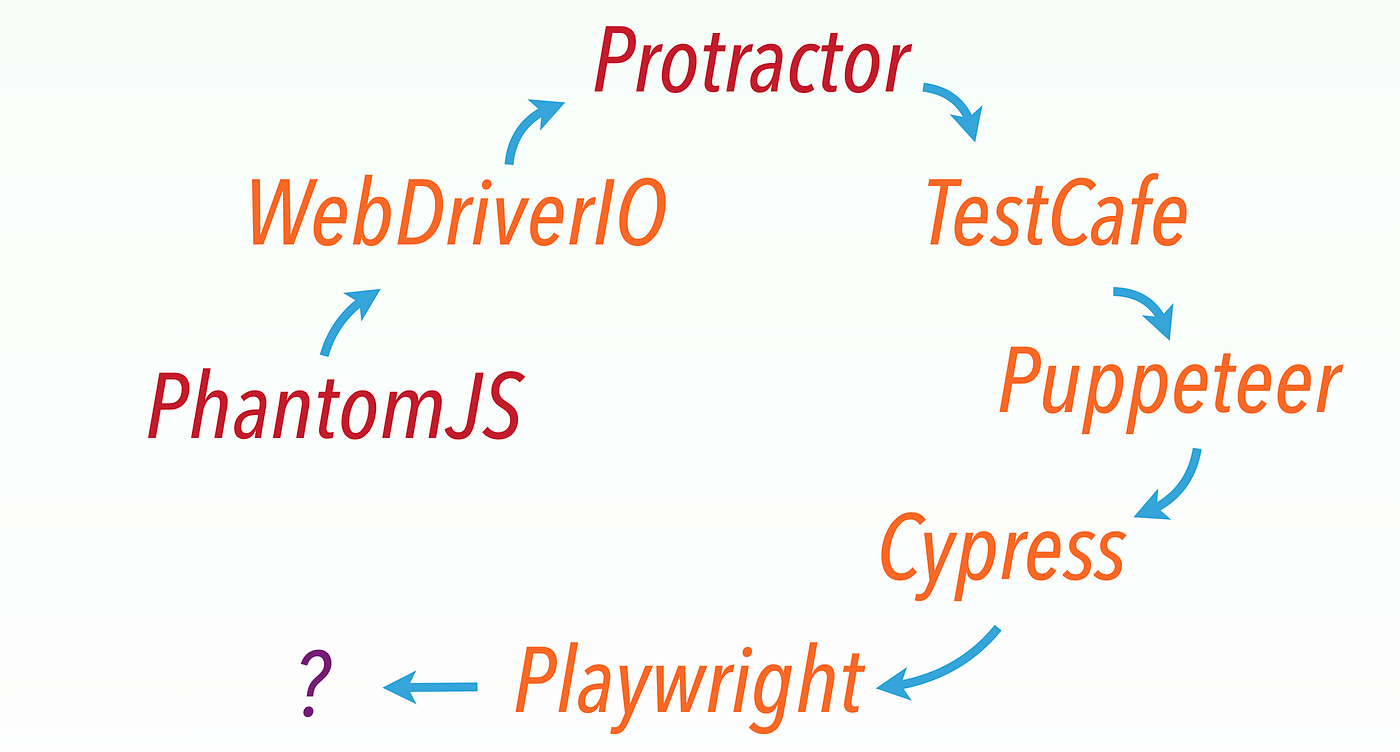

In practice, many software companies have been stuck in a cycle of failure, switching between different web automation frameworks for years.

In late 2024, two independent surveys reported similar results: Selenium WebDriver is Still the Best Web Test Automation Framework in 2024.

3. End-to-end test automation is rarely visible in many so-called ‘Agile’ teams.

This is against the implied visibility in “Automation”. I like watching “How It’s Made” and “Inside the Factory” documentaries, where the automation and production pipelines are fully visible.

(A video my father took when we visited the ISHIYA Chocolate Factory in Japan. My father’s article: End-to-End Test Automation is Cool, Visible, Highly-Productive, Real & Useful, Challenging and Fun!)

In many software teams that label themselves ‘Agile’, UI test automation is hardly ever seen.

4. End-to-end test automation is slow to develop and slow to provide feedback.

This is against the “fast” in “Automation”, in two senses: automated tests should be quick to develop and quick to give feedback.

Web technologies have remained largely unchanged for more than two decades, and from a technical testing perspective, all websites are essentially the same. This means a skilled end-to-end test automation engineer should be able to develop a working automated test for a new business scenario quickly.

Yet, in practice, many software professionals feel that creating new automated tests takes far too long.

Fast feedback is another critical factor. For example, two of my father’s larger applications — ClinicWise and WhenWise — each have over 600 user-story–level Selenium tests. By running them on the BuildWise CT server with parallel execution (about five build agents on Mac Minis), a full round of regression testing usually takes only around 40 minutes.
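As a rough back-of-the-envelope check on those numbers, the sketch below estimates wall-clock time when tests are spread across build agents. The 20-second average per test is my own assumption, chosen because 600 tests on 5 agents in 40 minutes works out to about that figure if the load is perfectly balanced. The greedy longest-first assignment is just a simple model for illustration, not a description of how BuildWise actually distributes tests, and it ignores start-up and reporting overhead.

```python
import heapq

def estimate_wall_clock(durations_sec, agent_count):
    """Greedy longest-first model: each test goes to the least-loaded agent."""
    loads = [0.0] * agent_count          # total seconds assigned to each agent
    heapq.heapify(loads)
    for duration in sorted(durations_sec, reverse=True):
        lightest = heapq.heappop(loads)  # agent with the least work so far
        heapq.heappush(loads, lightest + duration)
    return max(loads)                    # the busiest agent sets the wall-clock time

# Hypothetical durations: 600 tests averaging 20 seconds each (an assumption).
durations = [20.0] * 600

serial_minutes = sum(durations) / 60                       # one agent running everything
parallel_minutes = estimate_wall_clock(durations, 5) / 60  # five agents in parallel

if __name__ == "__main__":
    print(f"serial: {serial_minutes:.0f} min, parallel: {parallel_minutes:.0f} min")
```

Under these assumptions, a single machine would need roughly 200 minutes, while five agents bring it down to about 40. That is the difference between feedback within the hour and feedback after lunch.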

Related reading:

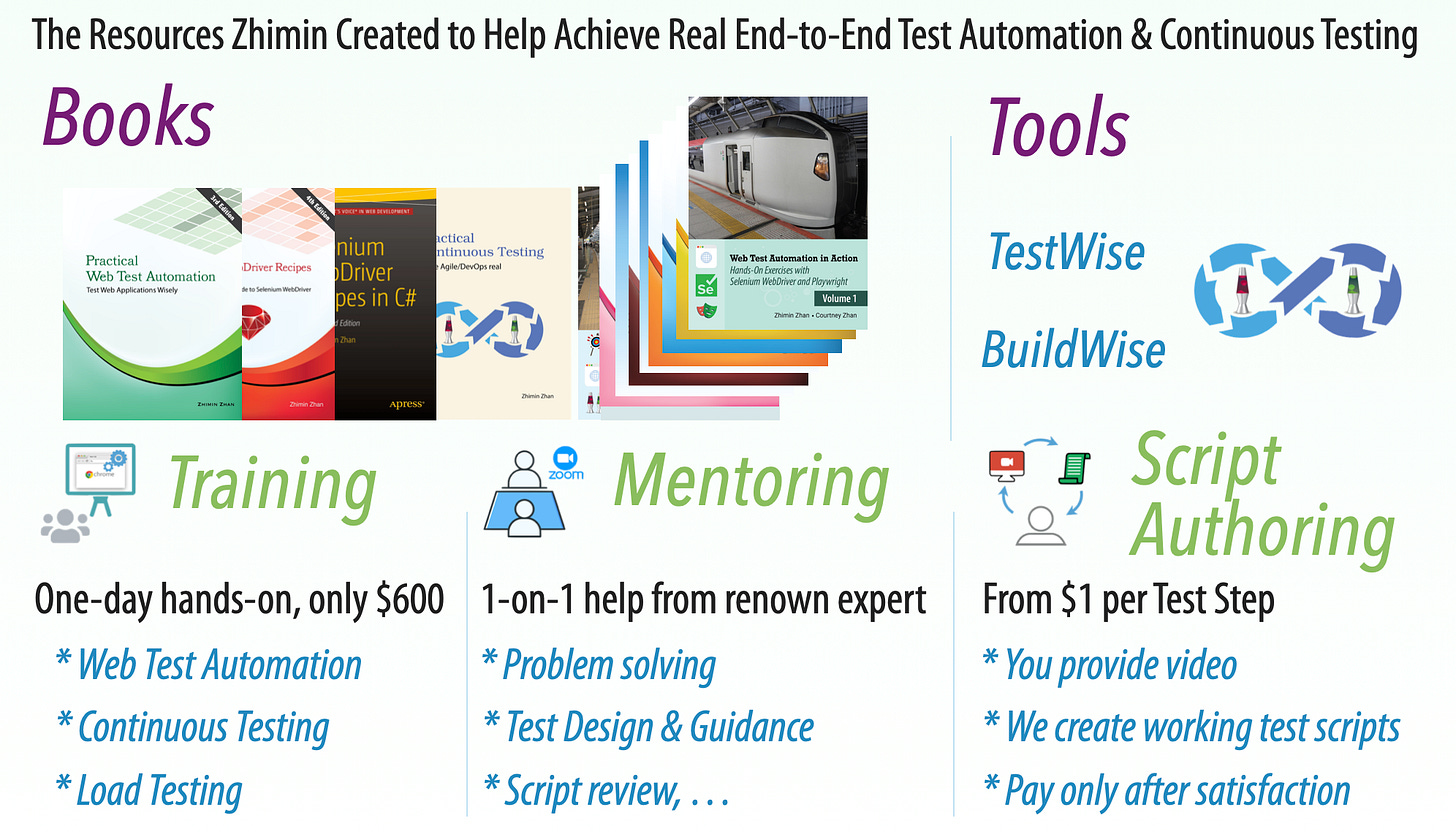

My father’s new book: "End-to-end Test Automation Anti-Patterns"