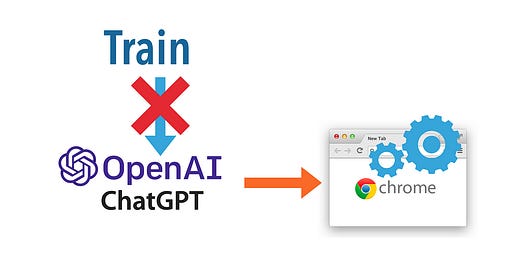

Why Won’t Training ChatGPT Get Good Results in Test Automation?

Most likely, a total waste of time.

My article “ChatGPT is Useless for Real Test Automation” was a hit (many reads) and drew mixed feedback. A common response was “ChatGPT is still in its early version, AI will improve, you can train it yourself”. This might sound about right, but this short statement rests on several incorrect assumptions.

Table of Contents:

∘ 1. AI is not new, and test automation engineers are not researchers

∘ 2. Most Test Automation Engineers are no good, how can they train bots?

∘ 3. More Training ChatGPT may lead to more wrong results

∘ 4. We are software testers, not trainers! We have tasks to complete at work.

1. AI is not new, and test automation engineers are not researchers

Artificial intelligence is not new. The history of AI shows that it started in 1956, but it has never been put to proper day-to-day use in software development. I am aware of the recent advancements in machine learning. Yes, AI may succeed in test automation, but it may also fail, as it has before (in at least two earlier waves). Unless you are a researcher (I was one for 3.5 years), you cannot bet your daily work on a possibility, right?

The term test automation implies “objectivity” and “speed”. If someone can use AI to do end-to-end testing daily and provide value to the team, then that is fine. If someone claims “test automation with AI, e.g. ChatGPT, is better”, prove it, objectively and quickly.

Here, I want to highlight an important Agile term: “timebox”. Engineers in software teams are not supposed to play with (or research) a new toy at work for months. In my opinion, the maximum timebox for such an experiment in test automation is one day (8 hours).

Timebox in Agile: a timebox is a time limit placed on a task or activity.

Some might argue, “That’s too short!”

Not really. Think about it: the web (as defined by the W3C) has not changed much for over two decades, and web testing skills are fully transferable between jobs. I have successfully tested numerous websites using the same tech stack: raw Selenium WebDriver + RSpec (completely free and open-source). For a real senior test automation engineer, the timebox should be a matter of minutes. Check out my article, “How do I Start Test Automation on Day 1 of Onboarding a Software Project?”

It is not just me; my young daughter could accomplish that too. She wrote the article “Set up, Develop Automated UI tests and Run them in a CT server on your First day at work” during her first internship.

So, rather than arguing over whether AI testing is better, do it for real, with proof. If my daughter (a 20-year-old in her first job) could deliver genuinely useful automated tests on Day 1 (and keep them running daily) by hand-scripting raw Selenium WebDriver, I would expect ‘your better AI-assisted approach’ to yield much better results.

By the way, my daughter’s level is not that high. By my assessment, she is at Level 2.5 of the AgileWay Continuous Testing Grading.

Also, creating automated tests efficiently (as my daughter did) is far from enough, as “Test Creation Only Accounts for ~10% of Web Test Automation Efforts”.

2. Most Test Automation Engineers are no good, how can they train bots?

In AI, there is a term “expert system”: a system that emulates the decision-making ability of a human expert. Common sense tells us that a trainer must have good knowledge of the field to train others. However, real test automation engineers are extremely rare!

“In my experience, great developers do not always make great testers, but great testers (who also have strong design skills) can make great developers. It’s a mindset and a passion. … They are gold.”

- Google VP Patrick Copeland, in an interview (2010)

“95% of the time, 95% of test engineers will write bad GUI automation just because it’s a very difficult thing to do correctly.”

- Microsoft test guru Alan Page, in an interview (2015)

“Testing is harder than developing. If you want to have good testing you need to put your best people in testing.”

- Gerald Weinberg, in a podcast (2018)

You can self-assess with “Definition of End-to-End Test Automation Success” or “One Simple Test Automation Scenario Interview Question that Most Candidates Failed”. In the interviews I conducted over the years, not a single automated tester job candidate answered this simple question correctly. Really, there was no excuse for it.

Based on my observations over 15 years, most (>90%) so-called “Automated Testers” or “Senior SDETs” are at Level 0 or 1 of the AgileWay Continuous Testing Grading.

If a Level 0 or 1 engineer trains a bot, what will the result be?

3. More Training ChatGPT may lead to more wrong results

A typical machine learning system refines its results based on feedback. This is OK in some advisory domains, but not in test automation, where most automated testers don’t even know what a good, maintainable test script looks like.

“Unmaintainable automated test scripts are useless.” — Zhimin Zhan


Subscribe to The Agile Way to keep reading this post and get 7 days of free access to the full post archives.