Why Recording Videos for Automated Test Execution in Test Scripts is Wrong?

Avoid capturing automated E2E test execution into video, as it is a waste of time. Promoting video reporting is a sign of ‘fake test automation engineers’.

This article is one of the “Be aware of Fake Test Automation/DevOps Engineers” series.

In Automated End-to-End Testing, many so-called ‘cool’ features like the ones below are mostly unnecessary.

Record-n-Playback (coming soon)

Recording test execution (video/screenshots)

Some commercial test automation tools, such as Ranorex, highlight a ‘cool’ feature: capturing the whole test execution video (also known as video-reporting). A variation of this feature is taking a sequence of screenshots after each test step. These so-called test automation tools all failed.

Recently, I saw a couple of articles comparing Selenium WebDriver with Playwright. The authors claimed that one of Playwright’s main advantages (over Selenium) is video reporting. This is very wrong! (As a web test automation framework, raw Selenium WebDriver is much, much better than Playwright, in every way.)

WARNING: bad! Setting up recording in Playwright test scripts:

    // taken from the Playwright docs: https://playwright.dev/docs/videos
    const context = await browser.newContext({
      recordVideo: {
        dir: 'videos/',
        size: { width: 640, height: 480 },
      }
    });

I understand the above is optional. People who think this feature is very helpful for developing/debugging automated tests do not understand test automation; they are fakes or amateurs.

Table of Contents:

· Real Test Automation Engineers do not have time to check videos

· Video/Screenshots reporting is tempting, but wrong

· Video/Screenshots reporting is bad

∘ 1. Counter-productive to resolve the issue

∘ 2. Slower test execution

∘ 3. Disk space, Cost and other complications

· If you do need Video reporting, there is a free and simpler way

Real Test Automation Engineers do not have time to watch/check videos

Real test automation engineers are capable of developing/maintaining a large automated E2E test suite (which runs frequently as a part of the Continuous Testing process). For example, the automated regression suite of my WhenWise app has 548 raw Selenium tests. Upon a green build (passing all these End-to-End user-story level tests), I push the build to production.

If all these tests were run on one build machine, it would take 3.5 hours. By running them in the BuildWise CT server with 7 build agents, the total execution time is reduced to 36 minutes.

As we know, getting a green build on a large (>200, Level 3 of AgileWay Continuous Testing Grading) automated E2E test suite is not easy, as a simple change to code or infrastructure might cause an unknown number of test failures.

For argument’s sake, let’s say a failed build has, on average, a 15% test failure rate. Then the time needed to watch (non-stop) the videos of the failed test executions is:

3.5 hours * 0.15 = 31.5 minutes

This does not include the time for:

downloading

analysing

re-watching (rewind, forward, pause)

human rest time
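The base estimate above can be written out as a small sketch. It assumes, as the calculation in the text implicitly does, that failed tests take their proportional share of the total execution time; the helper name is made up for illustration.

```javascript
// Figures from the text: the suite takes 3.5 hours on one machine,
// and 15% of tests fail in a failed build (a for-argument's-sake figure).
const SUITE_HOURS = 3.5;
const FAILURE_PERCENT = 15;

// Hypothetical helper: minutes of video covering only the failed tests,
// assuming every test takes roughly the same time to run.
function failedVideoMinutes(suiteHours, failurePercent) {
  const suiteMinutes = suiteHours * 60;
  return (suiteMinutes * failurePercent) / 100;
}

const watchOnceMinutes = failedVideoMinutes(SUITE_HOURS, FAILURE_PERCENT);
console.log(`Watching every failed test once: ${watchOnceMinutes} minutes`);
// prints "Watching every failed test once: 31.5 minutes"
```

The items in the list above all come on top of this figure.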

So, we are talking about 1 hour or more. Please note, this is just for watching the videos (for one time) — no work on resolving test failures yet.

That’s too long. For WhenWise (and my other web apps), I usually expect to release to production multiple times a day, if any change is made.

Test automation engineers are not police detectives, who can afford to spend hours watching CCTV surveillance videos again and again. The application under test changes frequently, so we need to respond to test failures quickly.

So, in my opinion, anyone claiming video reporting is useful is a fake test automation engineer (like the one in this real story) who has never worked on a single real, successful test automation project.

Video/Screenshots reporting is tempting, but wrong

I admit that video/screenshot reporting looks good in demonstrations. I made this mistake myself before, and went deeper than most Playwright testers: I implemented screenshot reporting in my own tool, TestWise (v1). At that time, influenced by some commercial test automation tools, I thought it might be a good idea. Very soon, I realized it was of no use and dropped the feature.

I met a few ‘test automation engineers’ promoting video-reporting, and each of them later turned out to be a fake. If you think about it, this is quite obvious. Most demonstrations of video-reporting, just like the use of recorders, are simple and short examples. The presenters know exactly which position in the video requires attention. However, real life is quite different. A typical enterprise E2E test often runs for more than 5 minutes, and seeking the relevant information takes time. Now multiply that by the test count: it costs a huge amount of time to look after even 50 tests (Level 2 of AgileWay Continuous Testing Grading). This suggests these ‘fake test engineers’ have only worked on a handful of test scripts that were only slightly more complex than login/logout test cases.

Some might say: “I sometimes do need a video of automated test execution”. Fine, record one when there is a need (see the last section for how). Doing it on every run is totally unnecessary. Remember, as a real test automation engineer, your top priority is to keep all automated tests valid, i.e. resolving failed tests efficiently.

Video/Screenshots reporting is bad

The short answer: video (and/or screenshots) capturing the whole test execution is unnecessary; most of the time, you only need to take a screenshot of the app when a test failure occurs. It is worse than unnecessary for the reasons below:

Counter-productive to resolving the issue

Slower test execution

Disk space, Cost and other complications
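The "screenshot only when a test fails" approach mentioned above could look roughly like the sketch below. It is not taken from any of the tools named in this article. The helper name `withFailureScreenshot` and the `screenshotPath` property are made up for illustration, and the only thing assumed of `driver` is a `takeScreenshot()` method resolving to a base64-encoded PNG, which is what selenium-webdriver's `WebDriver` provides.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Hypothetical helper: run one test body and save a screenshot ONLY if it throws.
// Nothing is captured for passing tests, so a green run has no recording overhead.
async function withFailureScreenshot(driver, testName, outputDir, testBody) {
  try {
    await testBody(driver);
  } catch (error) {
    try {
      fs.mkdirSync(outputDir, { recursive: true });
      const safeName = testName.replace(/[^a-z0-9]+/gi, "_");
      const file = path.join(outputDir, `${safeName}.png`);
      // takeScreenshot() is assumed to resolve to a base64-encoded PNG string.
      const base64Png = await driver.takeScreenshot();
      fs.writeFileSync(file, base64Png, "base64");
      error.screenshotPath = file; // illustrative property, so a reporter can link to it
    } catch (screenshotError) {
      // A screenshot problem must never hide the original test failure.
    }
    throw error; // the test still fails with its original error and stack trace
  }
}

// Usage inside a test (not run here):
//   await withFailureScreenshot(driver, "user can sign in", "screenshots", async (d) => {
//     /* test steps and assertions using d */
//   });
```

The original error is re-thrown unchanged, so the stack trace still points at the failing line in the test script.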

1. Counter-productive to resolving the issue

More importantly, video/screenshot reporting does not fit automated E2E testing. Try to answer this question: “What is the fundamental reason for video reporting?” The typical answer: “To find out why the test failed, and then fix it”. I will show you a much better way to achieve that goal, without video-reporting.

The truth is that video reporting is counter-productive. I say this because I have been able to develop and maintain these regression test suites:

ClinicWise (Web app), 611 raw Selenium tests, since 2013–03

TestWise (Desktop), 307 raw Appium tests, since 2019–07

WhenWise (Web app), 548 raw Selenium tests, since 2018–01

(I still have a few smaller test suites for SupportWise, BuildWise, SiteWise and TestWisely)

Furthermore, I maintained all of the above in my spare time. If I had adopted video reporting, I could not have handled even one regression suite. Why? It is too inefficient, given the nature of automated UI testing. I need to find the cause of a test failure and fix it very quickly, usually in under 1 minute (excluding test execution time). Viewing, skipping, rewinding, or forwarding a video is a big time-waster, and would definitely not work for regression test suites of this size.

When a test fails, it is for one of three main reasons:

Regression error (tests are fine, the app changed or bug introduced)

Test Scripts are wrong (the app is fine); a test-data issue is also a test script error.

Infrastructure error (app and tests are fine)

Whichever it is, what are the most natural and, importantly, efficient steps to resolve it?

Here are mine:

1. Inspect the screenshot of the app (in the browser) taken when the error occurred.

2. Examine the error stack trace in the test scripts.

3. Apply a fix.

4. Rerun the test script locally.

5. If resolved, commit your fix and trigger another run in the CT server; if not, go back to Step 1.

It is quite logical, isn’t it? (if engineers are not contaminated by a specific tool) Let’s see an example.

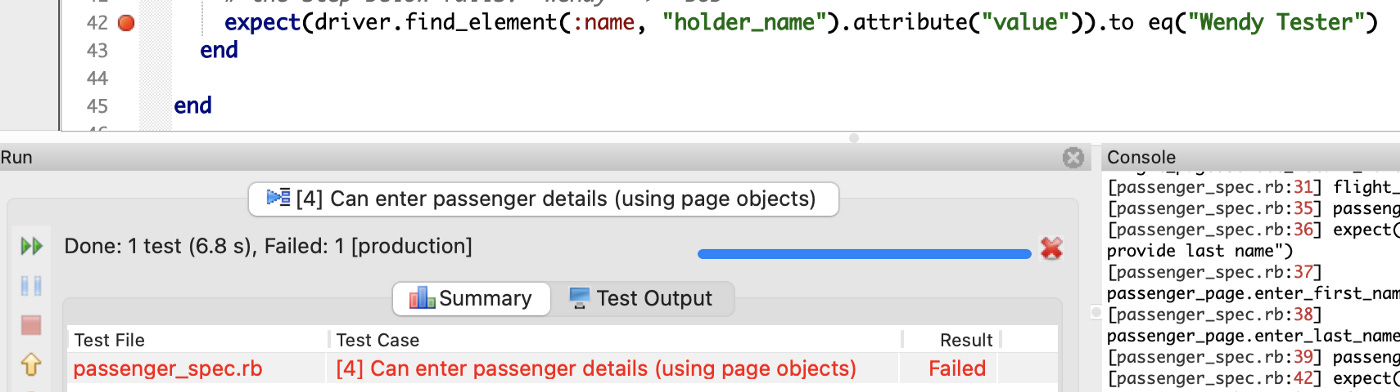

One test failed in a CT run, as shown in the build report in the CT server (in this case, the BuildWise CT Server). First, I quickly skim the test case name, then click the screenshot icon (camera icon).

If the engineer is familiar with the test scenario, they might have a fair idea about the cause.

Click the ‘Test Output’ tab to view the error message and its stack trace.

For this simple example, it is obvious that the failure is on the assertion.

Click the “Copy error line to Clipboard” button. Switch to TestWise IDE, paste it into the “Go to File” dialogue and navigate to the line in the test script file.

Just run this test case. (In TestWise, the browser will be kept open in this execution mode)

Refresh your memory about this test case by reading the scripts during the execution. The test failed as expected; check the web page in the browser.

In this case, it is quite clear that the cause is a wrong assertion in the test script. Fix the test step.

Just run this step in TestWise Debugging mode (this saves time, no need to start from the beginning). Modify the test step and run this step again. The test step now passed.

Rerun the (fixed) test case.

Now check in (Git commit, then push) the test script and trigger another run in the CT server.

Done for real, this is much quicker than it reads: excluding execution time, it usually takes me seconds. Now you see why downloading/watching videos is a waste of time.

More importantly, rhythm is essential for real test automation engineers. The above bug-resolving steps are natural and in a good rhythm. Each debugging step has quick feedback. Watching captured screenshots/videos breaks that rhythm.

2. Slower test execution

Any extra activity, such as capturing screenshots/videos, comes with a cost. Fast execution time is an important factor in automated End-to-End testing. We want to provide quick feedback to the team.

Take WhenWise’s regression test suite as an example: if saving screenshots adds a 5% overhead, I will need to wait about 10 extra minutes (if running on a single machine) for feedback. Putting aside the imaginary benefits (which do not exist), capturing test execution videos slows down the build process.
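As a rough sketch of that overhead arithmetic: the 5% figure and the 3.5-hour single-machine run are the ones used in this article, while applying the same 5% to the 36-minute parallel run is my own extrapolation, and the helper name is hypothetical.

```javascript
// Hypothetical helper: extra minutes added by a recording overhead given in percent.
function extraMinutes(baseMinutes, overheadPercent) {
  return (baseMinutes * overheadPercent) / 100;
}

// 3.5 hours on a single machine and the 5% overhead are the figures used in the text.
const singleMachineExtra = extraMinutes(3.5 * 60, 5);

// Assumption (not from the text): the same 5% also applies to the 36-minute parallel run.
const parallelExtra = extraMinutes(36, 5);

console.log(`Single machine: +${singleMachineExtra} min, 7 agents: +${parallelExtra} min`);
// prints "Single machine: +10.5 min, 7 agents: +1.8 min"
```

Either way, the delay is paid on every single build, whether or not anyone ever opens the recordings.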

3. Disk space, Cost and other complications

As we know, video files are large. Frequent test executions (if CT is done properly, though very few achieve that) mean the disk will fill up quickly.

Sometimes I run 5 builds a day for a large test suite (500+ Selenium tests).
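To get a feel for how fast the disk fills up, here is a back-of-the-envelope sketch. The 5 builds a day and 500 tests come from this article; the average video size is a pure placeholder (I have not measured it), so plug in your own number. The helper name is hypothetical.

```javascript
// Hypothetical helper: GB of video written per day if every test run is recorded.
function dailyVideoGB(buildsPerDay, testsPerBuild, avgVideoMB) {
  return (buildsPerDay * testsPerBuild * avgVideoMB) / 1024;
}

// 5 builds a day and 500 tests come from the text.
// 10 MB per video is a placeholder, NOT a measurement; substitute your own average.
const ASSUMED_VIDEO_MB = 10;
const perDayGB = dailyVideoGB(5, 500, ASSUMED_VIDEO_MB);

console.log(`${perDayGB.toFixed(1)} GB of video per day (with the assumed video size)`);
```

Whatever the real per-video size is, the total grows linearly with both the number of tests and the number of builds, which is exactly what a healthy CT process increases.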

Naturally, the cost issue comes up. However, the situation is worse than just wasting money. A few years ago, the servers (set up by so-called DevOps engineers) at a large company were frequently inaccessible. The reason: the video-reporting files gradually ate up the disk space (in a Docker image). Somehow, it affected our test servers. The “DevOps engineers” came up with a workaround script to periodically delete those video files. What a mess!

By the way, no one was looking at the captured automated test execution reports. I remember the test suite only had ~20 Gauge tests, and more than half failed every day. The automation engineer and other team members did not care at all. I quickly converted the tests into raw Selenium WebDriver + RSpec, and ran the whole regression suite (growing rapidly) in BuildWise much more frequently. Of course, without recording test executions.

If you do need Video reporting, there is a free and simpler way

Just run your automated tests in a Virtual Machine, which is a common approach. VM software usually comes with a recording feature. Nowadays, people are used to video conferencing tools such as Zoom and MS Teams, both of which offer screen recording. So, there is no need to pay for (or use) an automation tool to do the recording.