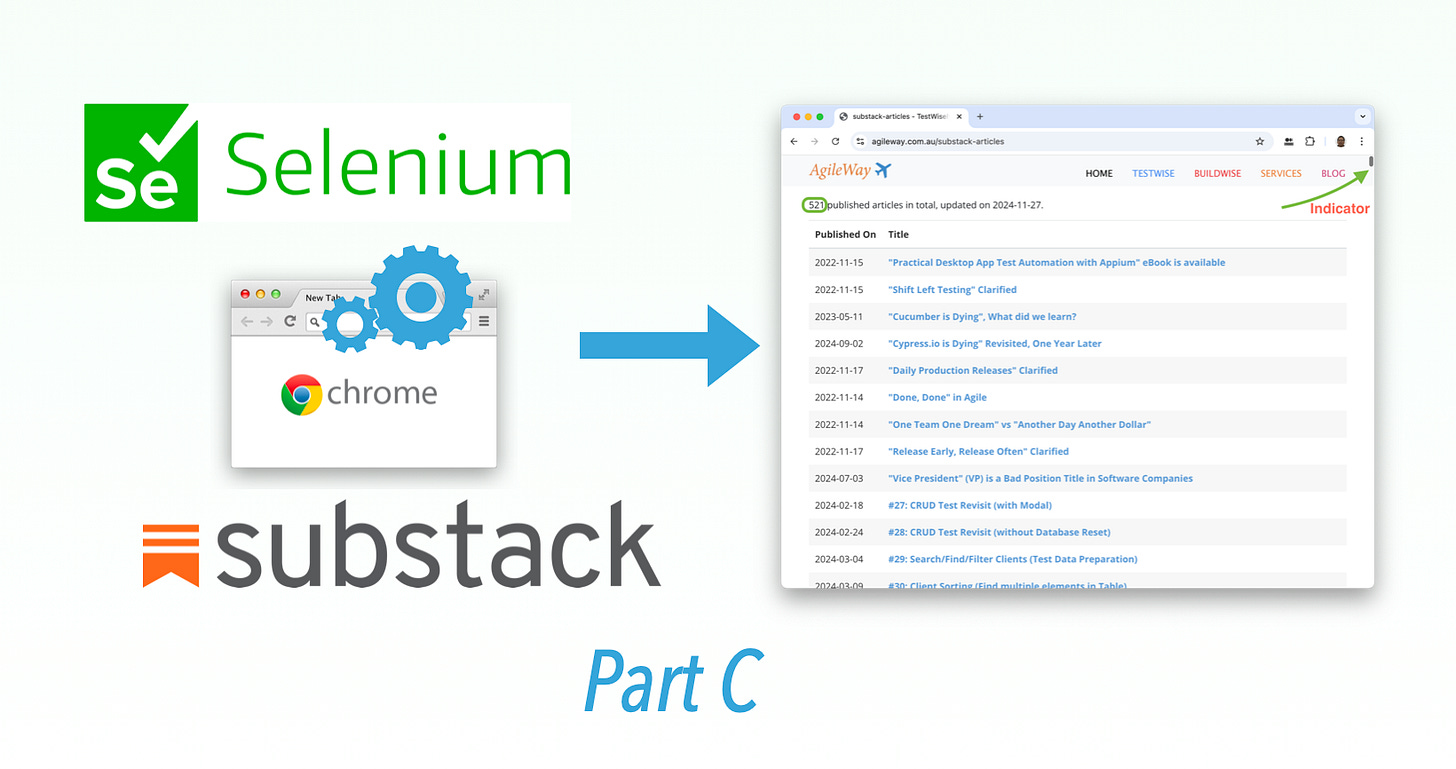

Case Study: Extract All Substack Article Titles and Links. Part C: Extract All

Handling Pagination.

This article series:

Part E: Annotation by Zhimin Zhan*

(offering valuable tips for test automation engineers to level up their skills, exclusively available on Substack)

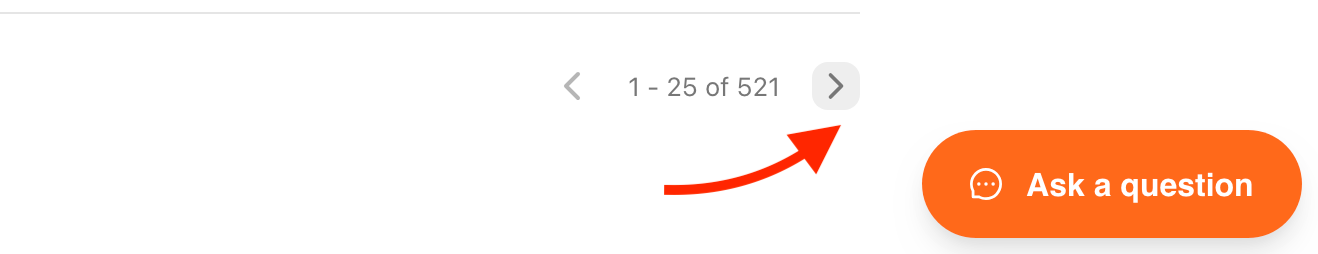

After Part B, I had the data for all 25 articles on the first page in a proper CSV file.

Extracting All 500+ Articles

Let’s focus on extracting the 2nd page’s articles first.

Clicking the "Next Page" button:

```ruby
driver.action.scroll_by(0, 2500).perform # scroll to the bottom of the page

next_button_xpath = ".../button[2]" # XPath hidden intentionally
next_page_btn = driver.find_element(:xpath, next_button_xpath)
next_page_btn.click

sleep 2 # give the next page time to load
```

Then, the core operations remain the same, except that we save to a different CSV file. I added a page sequence number to the generated CSV file names.

```ruby
page = 1

# ...

# after extracting all articles on the page, the data is stored in story_data[]
page += 1
page_seq = page.to_s.rjust(2, "0")
csv_file = File.join(File.dirname(__FILE__), "..", "substack-published-articles-#{page_seq}.csv")

# ...
```

Rerun the special debugging test in TestWise. We get the file "substack-published-articles-02.csv".
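The two-digit padding is what keeps the files in page order when they are listed alphabetically: "02" sorts before "10", whereas an unpadded "2" would sort after "10". A minimal sketch of the naming, assuming the same file pattern as the script above; `csv_path_for` is a hypothetical helper name, not part of the original script.

```ruby
# Hypothetical helper: build the per-page CSV path, zero-padding the page
# number to two digits so that file names sort in page order.
def csv_path_for(page, dir = File.dirname(__FILE__))
  page_seq = page.to_s.rjust(2, "0")
  File.join(dir, "..", "substack-published-articles-#{page_seq}.csv")
end

# String sorting compares character by character:
unpadded = ["2", "10"].sort # => ["10", "2"]
padded   = ["02", "10"].sort # => ["02", "10"]

puts csv_path_for(2)
```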

The file looks good!

Looping until the last page

We are on page 2. By calculation, there are still 19 pages to go, so I added a loop to the script.
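The "19 pages to go" figure follows from the totals in this article: 521 articles at 25 per page need 21 pages (the last one partially filled), and pages 1 and 2 are already done. A quick Ruby check of that arithmetic; the constant and variable names are just for illustration.

```ruby
# Figures taken from this article: 521 articles, 25 articles per page.
TOTAL_ARTICLES = 521
PER_PAGE = 25

# Integer division rounded up: a partially filled last page still counts.
total_pages = (TOTAL_ARTICLES + PER_PAGE - 1) / PER_PAGE
current_page = 2
remaining = total_pages - current_page

# The last page holds whatever is left after the full pages.
last_page_count = TOTAL_ARTICLES - (total_pages - 1) * PER_PAGE

puts "total pages: #{total_pages}, remaining: #{remaining}, on last page: #{last_page_count}"
```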

```ruby
page = 2

19.times do |y|
  puts y
  story_data = []
  # ...
end
```

I did not expect it to run smoothly on the first go; after all, it is an automation script, and I hadn't added any stabilisation or waits. As expected, on page "151-175" (out of 521), I got a test execution failure.

Still, it had processed 4 pages successfully and run fine for over 4 minutes.

The reason for the failure:

```
Selenium::WebDriver::Error::NoSuchElementError:
no such element: Unable to locate element: {"method":"xpath","selector":"//h3[@class='subtitle']"}
```

This is because one of the articles had NO subtitle. An easy fix:

```ruby
subtitle = driver.find_element(:xpath, "//h3[@class='subtitle']").text rescue ""
```

(Ruby, what a great scripting language!)
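The inline `rescue ""` does the job, but as a modifier it catches any `StandardError`, so an unrelated Selenium error (for example, a stale element) would also silently become an empty subtitle. An alternative sketch, assuming the Selenium Ruby bindings' `find_elements` (plural), which returns an empty array instead of raising when nothing matches; `optional_text` is a hypothetical helper name.

```ruby
# Hypothetical helper: return the text of the first element matching the
# XPath, or a default when nothing matches. find_elements (plural) returns
# an empty array rather than raising NoSuchElementError.
def optional_text(driver, xpath, default = "")
  element = driver.find_elements(:xpath, xpath).first
  element ? element.text : default
end

# Usage inside the extraction loop (driver is the Selenium WebDriver instance):
# subtitle = optional_text(driver, "//h3[@class='subtitle']")
```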

I wanted to continue from where it failed rather than start again from page 1.

Navigate to the previous page (126-150).

Update the script with the correct starting page number.
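Working out the starting page number from the pager's range label is simple arithmetic at 25 articles per page. A small sketch, assuming labels in the "126-150" form shown in this article; `page_for_range` is a hypothetical helper, not part of the original script.

```ruby
PER_PAGE = 25

# Hypothetical helper: convert a range label such as "126-150" into its
# 1-based page number, assuming PER_PAGE articles per page.
def page_for_range(label, per_page = PER_PAGE)
  first_article = Integer(label.split("-").first)
  (first_article - 1) / per_page + 1
end

puts page_for_range("151-175") # the page that failed
puts page_for_range("126-150") # the previous page, to resume from
```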

```ruby
page = 6

16.times do |y|
  # ...
end
```

Rerun the script in TestWise.

It all went smoothly for about 15 minutes.

Patching

By examining the generated CSV files (21 in total), I noticed two abnormalities: pages 09 and 11. Their files were too small.
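Spotting short files can also be automated rather than done by eye. A minimal sketch, assuming the file naming used above, one header row per CSV (an assumption; set `header_rows: 0` if the files have none), and 25 articles per full page. The last page is skipped because it is expected to be partial. `incomplete_pages` is a hypothetical helper name.

```ruby
require "csv"

# Hypothetical helper: list the page numbers (e.g. "09", "11") whose CSV holds
# fewer data rows than a full page. Assumes `header_rows` header lines per file.
# The highest-numbered file is the last page, which may legitimately be
# partial, so it is not checked.
def incomplete_pages(dir, per_page: 25, header_rows: 1)
  files = Dir.glob(File.join(dir, "substack-published-articles-*.csv")).sort
  files[0...-1].filter_map do |path|
    data_rows = CSV.read(path).size - header_rows
    path[/-(\d+)\.csv\z/, 1] if data_rows < per_page
  end
end

# Usage: p incomplete_pages(File.join(File.dirname(__FILE__), ".."))
```

Parsing with the CSV library rather than counting lines keeps quoted fields that contain line breaks from inflating the count.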

This was likely because the script opened the page but did not wait for it to fully load.
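A common fix for this kind of timing problem is to replace the fixed `sleep 2` with a wait that polls until a condition holds or a timeout expires. Selenium's Ruby bindings ship one (`Selenium::WebDriver::Wait`); the sketch below is a plain-Ruby version of the same idea so the mechanics are visible. What counts as "fully loaded" on this page is an assumption, and `wait_until` is a hypothetical helper name.

```ruby
# Hypothetical helper: call the block repeatedly until it returns a truthy
# value, sleeping `interval` seconds between attempts. Raises if `timeout`
# seconds pass without success. Returns the block's truthy value.
def wait_until(timeout: 10, interval: 0.5)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    result = yield
    return result if result
    if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
      raise "condition not met within #{timeout}s"
    end
    sleep interval
  end
end

# Possible use after clicking "Next Page" (the XPath is hypothetical, and the
# last page holds fewer than 25 articles, so the condition would need adjusting):
# wait_until { driver.find_elements(:xpath, "//div[@class='post-row']").size >= 25 }
```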

To patch them (re-extract pages 09 and 11), I manually navigated to page 8 (176-200), then updated the test script as below.

```ruby
page = 8

3.times do |y|
  # ...
end
```

This ran for 3 minutes, generating "09", "10" and "11". I only needed two.
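Judging by this patch run (starting on page 8, three iterations, files 09 to 11), each iteration moves to the next page before saving, so a run that starts on page N with K iterations writes pages N+1 through N+K. A tiny sketch of that relationship, which also shows where the third, unneeded file came from; `pages_for_run` is a hypothetical helper name.

```ruby
# Hypothetical helper: the zero-padded page numbers written by a run that
# starts on page `start_page` and loops `iterations` times, assuming each
# iteration advances to the next page before saving its CSV.
def pages_for_run(start_page, iterations)
  (start_page + 1..start_page + iterations).map { |page| page.to_s.rjust(2, "0") }
end

needed = %w[09 11]
extra = pages_for_run(8, 3) - needed

puts pages_for_run(8, 3).inspect # the patch run
puts extra.inspect               # the page that was re-extracted unnecessarily
```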

OK, with just two executions (plus some minor patches to the automation script), I got all 21 CSV files containing the article data needed to generate the full article list page.